ShahroodRC – Future Engineers 2025

🏆 1st Place – Iran National WRO 2025

🌍 Heading to Singapore International Final (26-28 Nov 2025)

A fully autonomous LEGO EV3 robot with vision-based obstacle avoidance and precision navigation.

| Feature | Details |

|---|---|

| 🤖 Platform | LEGO EV3 Mindstorms with Python (ev3dev) |

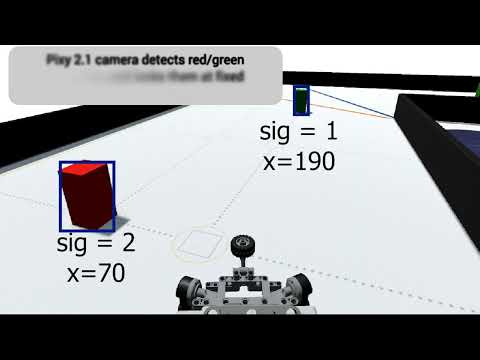

| 👁️ Vision System | Pixy 2.1 camera (60 fps, real-time obstacle detection) |

| 🧭 Navigation | Dual ultrasonic sensors + color sensor for precision wall-following |

| ⚡ Performance | 90% success rate in 50+ test runs; completes challenges in <2min |

| 🔧 Custom Parts | 3D-printed Pixy 2.1 mount for optimal positioning |

| 📦 Components | All standard LEGO pieces (100% WRO-compliant) |

ShahroodRC blends "Shahrood" (our hometown in Iran, symbolizing resilience like its mountains) with "RC" (Robotics Club). The name stands for a story of iteration, teamwork, and turning "what if" into "we did it." Behind the code and gears is the quiet support of our families: our real "power source," fueling late nights and breakthroughs. ShahroodRC isn't just a robot; it's proof that passion and persistence lead to a global stage.

- 👥 The Team

- 🏆 National Championship Victory

- 🎯 Mission Overview for WRO Future Engineers Rounds

- 📸 Pictures

- 🎬 Videos

- 📱 Randomizer App

- 🔄 Our Path – Platform Evolution

- 🔄 Design Evolution & Iteration History

- 📊 Performance Metrics & Statistics

- 🤖 Robot Components Overview

- 💻 Code For Each Component

- 🚗 Mobility Management

- ⚡ Power and Sense Management

- 🚧 Obstacle Management

- 🏗️ Robot Assembly Guide

- 🛠️ Software Setup & Installation

- 🔧 Sensor Calibration Guide

- 🔴 Problems and Solutions

- 💰 Cost Report

- 📁 Repository Structure

- 🤝 Contributing & Support

- 📖 License

We are the ShahroodRC team, a group of dedicated students from Iran with a passion for robotics, electronics, and programming. Our goal is to design an innovative robot for the WRO 2025 Future Engineers category, leveraging technical skills and collaboration to tackle complex challenges.

- Role: Project Manager and Software Engineer.

- Age: 16

- Description: Hey! I'm Sepehr, and this is my third WRO season. Last year, I won third place in our national competition in the Robo Mission category. This year I wanted a new experience. I enjoy playing the piano and tennis, and I'm passionate about programming, physics, and math.

- sepehryavarzadeh@gmail.com

- Github

- Role: Mechanical and Electronics Specialist.

- Age: 17

- Description: Hi everyone! I'm Nikan from Iran. I'm an advanced LEGO robotics instructor at a training center, with experience participating in 5 WRO national finals. My expertise focuses on mechanical/electronic systems, as well as LEGO set design.

- nikanbsr@gmail.com

- Role: Lead Developer and Algorithm Designer.

- Age: 19

- Description: Hey! I'm Amirparsa, and this is my third year competing in WRO. I'm a professional ping-pong player and super passionate about math and physics. I'm studying computer science at university and love diving into programming challenges.

- amirparsa.saemi2021@gmail.com

- Role: Coach

- Age: 24

- Description: Ali Raeisian, a B.Sc. graduate in Computer Engineering, is currently pursuing a Master’s degree in Computer Science with a focus on software. A former WRO competitor, he participated in the 2016 WRO global competition in India in the robot soccer category. Now, he specializes in game development, contributing his expertise to both technical and creative aspects of the field.

- raeesianali@gmail.com

- Github

- Role: Manager

- Age: 50

- Description: He is the founder of Shahrood's educational LEGO institute.

In this project, we aimed to combine creativity, teamwork, and technical knowledge to build an efficient robot for the challenges of WRO 2025.

The ShahroodRC team achieved an impressive victory by securing first place in the National WRO Competition, the official qualifying event for the World Robot Olympiad (WRO) 2025 in the Future Engineers category. Held in August 2025 in Rasht, Iran, this success highlighted our team’s dedication, teamwork, and innovative approach. Competing against many talented teams, we excelled in navigating challenging tracks, earning our qualification for the WRO 2025 International Final in Singapore (26–28 November 2025).

- Event: Iran National Robotics Competition (WRO 2025 Qualifier)

- Date: August 2025

- Location: Rasht, Iran

- Achievement: 1st Place, qualifying for WRO 2025 International Final

- Key Moment: Our robot completed both the Open and Obstacle Challenges, demonstrating precision and reliability under competitive pressure.

ShahroodRC’s championship victory at the National WRO Competition

ShahroodRC Team celebrating their 1st Place victory

ShahroodRC Team in National Final

This national championship victory marks a significant milestone, qualifying ShahroodRC for the WRO 2025 International Final in Singapore. With the theme "The Future of Robots," we are ready to compete on the global stage, representing Iran with pride and showcasing our skills against over 500 international teams.

Important

WRO Future Engineers Rulebook: Thoroughly read the rulebook for all guidelines. Official link: WRO Future Engineers 2025 Rulebook. Key rules: Open – no obstacles, 3 laps; Obstacle – avoid pillars, park after laps.

| Front | Back |
|---|---|

| Left | Right |
|---|---|

| Top | Bottom |
|---|---|

You can watch the Obstacle Challenge, Open Challenge, and Explaining videos on YouTube. You can also watch them here in the videos folder.

| Open Challenge | Obstacle Challenge | Explaining Video |
|---|---|---|

Generates WRO-2025-compliant random tracks for both challenges.

To assist teams and judges in simulating the dynamic and unpredictable nature of the WRO 2025 Future Engineers challenges, the ShahroodRC team developed a custom Randomizer Application for Android devices. This app generates randomized track layouts and obstacle configurations that comply with official WRO 2025 rules for both the Open Challenge and the Obstacle Challenge.

- Dual Challenge Support: Generates valid configurations for both Open and Obstacle rounds.

- Rule-Compliant Outputs: Ensures all generated layouts adhere to WRO 2025 regulations.

- User-Friendly Interface: Simple tap-to-generate design with clear visual feedback.

- Offline Functionality: No internet required—ideal for competition environments.

- Export & Share: Results can be viewed on-screen or shared as text.

- Install the APK file (`randomizer.apk`) on any Android device (Android 7.0 or later recommended).

- Open the app and select your desired challenge type (Open or Obs) to receive a randomized, competition-ready layout.

- Use the output to set up your practice arena or verify robot behavior.

Note: This tool was used internally during our development and testing phases to ensure our robot could handle any valid WRO 2025 scenario with robustness and adaptability.

The latest version of the Randomizer app is included directly in this repository:

Security Note: This APK is built and signed by the ShahroodRC team. Always scan files with your preferred antivirus before installation.
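The Randomizer app itself is an Android APK whose source is not part of this section. As a rough illustration of the underlying idea only, the Python sketch below draws a reproducible random layout from a seed. The option sets (`DIRECTIONS`, `SECTION_WIDTHS`, one to two pillars per section) and the names `Layout` and `generate_layout` are hypothetical placeholders, not the official WRO 2025 constraints, which must be taken from the rulebook.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical option sets for illustration only; the real constraints
# come from the official WRO 2025 Future Engineers rulebook.
DIRECTIONS = ("clockwise", "counterclockwise")
SECTION_WIDTHS = ("wide", "narrow")
PILLAR_COLORS = ("red", "green")
NUM_SECTIONS = 4  # four straight sections around the square track


@dataclass
class Layout:
    challenge: str
    direction: str
    start_section: int
    widths: List[str]
    pillars: List[List[str]] = field(default_factory=list)


def generate_layout(challenge: str, seed: Optional[int] = None) -> Layout:
    """Draw one random layout; the same seed always yields the same layout."""
    if challenge not in ("open", "obstacle"):
        raise ValueError("challenge must be 'open' or 'obstacle'")
    rng = random.Random(seed)  # private RNG, so seeding does not touch global state
    direction = rng.choice(DIRECTIONS)
    start = rng.randrange(NUM_SECTIONS)
    if challenge == "open":
        widths = [rng.choice(SECTION_WIDTHS) for _ in range(NUM_SECTIONS)]
        return Layout(challenge, direction, start, widths)
    # Obstacle round (assumption): standard-width sections, 1-2 pillars each.
    widths = ["wide"] * NUM_SECTIONS
    pillars = [
        [rng.choice(PILLAR_COLORS) for _ in range(rng.randint(1, 2))]
        for _ in range(NUM_SECTIONS)
    ]
    return Layout(challenge, direction, start, widths, pillars)


if __name__ == "__main__":
    print(generate_layout("open", seed=42))
    print(generate_layout("obstacle", seed=42))
```

Seeding is the useful design choice here: when a test run fails, the same seed recreates the exact layout for debugging.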

The ShahroodRC team went through a demanding development process to find the most suitable, efficient, and reliable platform for our WRO 2025 Future Engineers robot. We tested multiple hardware platforms (Arduino Uno, ESP32, Raspberry Pi Zero, and LEGO EV3), evaluating each based on processing power, sensor integration, power consumption, real-time performance, and reliability in competition environments. Below is a detailed story of our journey, including the challenges faced and the lessons that guided us to our final platform choice.

We initially chose the Arduino Uno (ATmega328P, 16 MHz, 32 KB Flash, 2 KB SRAM) for its simplicity, affordability, and compatibility with a wide range of sensors and actuators. Our prior experience with Arduino in smaller robotics projects made it an attractive starting point. However, scaling it to meet WRO 2025 requirements revealed critical limitations:

- Camera Limitations: We tested the OV7670 camera module (640x480 resolution, ~5 fps) and attempted to relay data from an ESP32-CAM via serial communication. The Arduino’s limited SRAM (2 KB) and processing power couldn’t handle image processing, resulting in unreliable object detection and low frame rates, far below the ~30 fps needed for real-time obstacle avoidance.

- Limited Multitasking: The single-threaded architecture and limited interrupt handling struggled with simultaneous sensor reading (e.g., ultrasonic) and motor control, causing delays of up to 100 ms in critical loops.

- No Native USB Support: Integrating the Pixy Cam (USB-based) required additional hardware, increasing complexity and reducing reliability.

Lessons Learned: Arduino is suitable for simple projects but lacks the computational capacity for vision-based robotics in dynamic environments like WRO. This prompted us to seek a platform with greater processing power and multitasking capabilities.
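To put the 2 KB SRAM figure in perspective, the snippet below computes the raw size of a single uncompressed frame. It assumes 2 bytes per pixel (RGB565, one of the OV7670's output formats) for the 640x480 case and 1 byte per pixel for a hypothetical 160x120 grayscale frame; the helper name `frame_bytes` is illustrative.

```python
ARDUINO_UNO_SRAM_BYTES = 2 * 1024  # ATmega328P: 2 KB SRAM


def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Raw size of one uncompressed image frame in bytes."""
    return width * height * bytes_per_pixel


frames = {
    "640x480 RGB565": frame_bytes(640, 480, 2),
    "160x120 grayscale": frame_bytes(160, 120, 1),
}

for name, size in frames.items():
    ratio = size / ARDUINO_UNO_SRAM_BYTES
    print(f"{name}: {size} bytes, {ratio:.1f}x the Uno's entire SRAM")
```

Even the small grayscale frame is more than nine times the available SRAM, so a full frame can never be held in memory at once.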

The ESP32 (dual-core Xtensa LX6, 240 MHz, 520 KB SRAM) was our next choice, offering improved processing power, integrated Wi-Fi/Bluetooth, and better memory management. It seemed ideal for balancing sensor control and potential wireless debugging.

- Pros:

- Dual-core processing enabled parallel tasks (e.g., sensor reading and motor control).

- Wi-Fi/Bluetooth allowed for remote monitoring, useful during testing.

- 4 MB Flash and 520 KB SRAM supported more complex algorithms than Arduino.

- Cons:

- Sensor Interference: Simultaneous I2C (for sensors) and PWM (for motors) operations caused jitter, with signal delays up to 50 ms due to GPIO conflicts.

- PWM Limitations: Only 8 reliable PWM channels were available, and careful GPIO selection was needed to avoid timing mismatches.

- Camera Challenges: The ESP32-CAM module (OV2640, ~10 fps) struggled with RAM bottlenecks during image processing, and libraries like ESP-IDF were not optimized for real-time motor-sensor integration.

- Library Limitations: MicroPython and Arduino ESP32 cores lacked robust image processing libraries for WRO’s dynamic requirements.

Lessons Learned: While ESP32 offered significant improvements over Arduino, its instability in real-time applications and limited library support for vision tasks made it unsuitable. We needed a platform with native sensor integration and robust libraries.

The Raspberry Pi Zero (1 GHz single-core ARM11, 512 MB RAM, Linux-based) was our next attempt, chosen for its support for Python, OpenCV, and USB add-ons like the Pixy Cam or Pi Camera.

- Advantages:

- Python and OpenCV enabled advanced image processing (~20 fps with optimized settings).

- Multi-threaded programming supported simultaneous sensor and motor tasks.

- USB and I2C interfaces allowed easy integration of the Pixy Cam.

- Challenges:

- Power Sensitivity: The Pi Zero required a stable 5V/2A supply. Voltage drops below 4.8V during motor and camera use caused sudden shutdowns.

- Heat Issues: Continuous operation (camera streaming at 20 fps and motor control) raised board temperatures to ~65°C, leading to thermal throttling.

- Hardware Fragility: We lost two boards—one due to a short circuit from an improperly grounded motor driver (TB6612FNG) drawing ~1.5A, and another from a current surge (~2A) when powering the camera and motors simultaneously.

Lessons Learned: The Pi Zero’s processing power was promising, but its fragility and power demands were impractical for competition use. We needed a more robust platform designed for educational robotics.

After facing challenges with previous platforms, we returned to the LEGO EV3 Mindstorms system (ARM9, 64 MB RAM, 16 MB Flash), leveraging our team’s prior WRO experience. The EV3 offered unmatched integration, safety, and reliability.

- Stability & Robustness: The EV3 Intelligent Brick is built for rugged environments, handling two Medium Motors (rated at roughly 240–250 rpm and 8 N·cm running torque) and four sensors without external drivers.

- Built-in Ports: Four motor ports and four sensor ports (e.g., INPUT_1 for the Pixy Cam, INPUT_2/3 for the Ultrasonic Sensors, INPUT_4 for the Color Sensor) simplified wiring and reduced failure risks.

- Pixy Cam Integration: Using a custom I2C connection (via EV3 sensor port, 5V/120–160 mA), we integrated the Pixy Cam without USB host requirements, ensuring compatibility.

- Development Efficiency: Python via ev3dev allowed rapid development, with libraries like `ev3dev2` supporting precise motor control (e.g., `on_for_degrees`) and sensor polling (10 ms for color sensor).

- Competition-Proven: The EV3’s extensive use in WRO and availability of open-source libraries ensured reliable performance.

Implementation Impact: The EV3’s stability influenced our code design, enabling a PID-like steering algorithm (amotor) and dynamic distance adjustment (fasele) for robust navigation. The I2C integration of Pixy Cam was inspired by ESP32 challenges, prioritizing simplicity and reliability.
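The team's actual `amotor`/`fasele` code is not reproduced in this section. The sketch below shows what a "PID-like" wall-following step can look like: a proportional-derivative (PD) controller that turns the distance error from a 27 cm target into a clamped steering command. The class name `WallFollower`, the gains `kp` and `kd`, the ±100 limit, and the sign convention (positive output steers away from the wall) are all hypothetical, not the tuned values used on the robot.

```python
class WallFollower:
    """PD controller: wall-distance error (cm) -> clamped steering command.

    Sign convention (assumed): a positive output steers away from the wall.
    """

    def __init__(self, target_cm=27.0, kp=2.0, kd=0.5, limit=100.0):
        self.target_cm = target_cm  # hypothetical fixed target; the robot adjusts it dynamically
        self.kp = kp
        self.kd = kd
        self.limit = limit
        self._prev_error = None

    def update(self, measured_cm: float) -> float:
        # Positive error means the robot is closer to the wall than the target.
        error = self.target_cm - measured_cm
        # Derivative term: change in error since the previous call (0 on the first call).
        derivative = 0.0 if self._prev_error is None else error - self._prev_error
        self._prev_error = error
        command = self.kp * error + self.kd * derivative
        return max(-self.limit, min(self.limit, command))
```

Calling `update` once per control-loop iteration at a fixed rate keeps the derivative term meaningful; a full PID would add an integral term to remove steady offsets.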

| Platform | Processing Power | Sensor Integration | Power Consumption | Reliability | WRO Suitability | Approx. Cost (USD) |
|---|---|---|---|---|---|---|
| Arduino Uno | 16 MHz, 2 KB SRAM | Limited (I2C, Analog) | ~100 mA (base) | Low (camera issues) | Poor | $25 |
| ESP32 | 240 MHz, 520 KB SRAM | I2C, PWM, UART | ~200 mA (with Wi-Fi) | Medium (jitter) | Moderate | $10 |
| Raspberry Pi Zero | 1 GHz, 512 MB RAM | USB, I2C, GPIO | ~300 mA (with camera) | Low (brownouts) | Moderate | $15 |
| LEGO EV3 | 300 MHz, 64 MB RAM | 4 Motor, 4 Sensor Ports | ~500 mA (full load) | High | Excellent | $150 |

Each platform tested taught us critical lessons about system design, integration challenges, and performance trade-offs:

- Arduino Uno: Highlighted the importance of processing power for vision tasks.

- ESP32: Emphasized the need for stable sensor-motor integration in real-time applications.

- Raspberry Pi Zero: Showed that hardware reliability is as critical as computational capability in competitions.

- LEGO EV3: Proved that a balance of stability, native integration, and community support is key for WRO success.

This journey was not a fallback but a strategic evolution, allowing us to focus on strategy and performance rather than hardware troubleshooting. For future projects, we plan to explore hybrid platforms (e.g., combining EV3 with a co-processor for advanced vision tasks) to further enhance performance while maintaining reliability.

During testing, we used our in-house Randomizer App to validate performance across hundreds of randomized scenarios, ensuring reliability under competition conditions.

By choosing EV3, we ensured our robot could reliably execute complex tasks like line following, obstacle avoidance, and parking, meeting WRO 2025’s demanding requirements with confidence.

v1.0 — EV3 Transition & Competition Robot (post-platform change)

- After switching our platform to LEGO EV3, the first EV3-based robot we built was essentially complete and performed reliably with no major issues.

- We entered the same robot in the Iran National WRO (August 2025) and secured 1st Place using that build.

- Status: Competition-proven — served as the stable baseline for subsequent improvements.

Post-Nationals Update (Aug–Nov 2025)

- Upgraded the camera from the original Pixy to Pixy 2.1 to gain a higher frame rate and better color/signature detection.

- Raised the camera mounting height and increased its downward tilt toward the ground to improve target visibility at competition distances.

- Added a second drive motor for the Open Challenge to increase torque and speed.

- Reinforced and slightly redesigned key structural elements of the chassis to improve stiffness and durability under repeated competition runs.

- Relocated the LED indicators from the front of the robot to a new mount above and beside the Pixy camera to keep status LEDs visible to judges and to reduce front-facing interference during runs.

- Added a relay circuit controlled via an EV3 motor port to switch the LEDs on/off from code (relay connected and driven using a spare motor output), enabling power-efficient LED control and a clean integration without extra power modules.

- Designed and fitted a protective Pixy cover (3D-printed) to shield the lens from impacts and to lock the camera into the new elevated mount.

- Impact: improved obstacle detection, greater mechanical robustness, more consistent performance across runs, visual feedback, easier LED control from the EV3, and increased protection and stability for the Pixy mount.

- Vision: Pixy 2.1 delivers higher FPS and more reliable signature tracking, reducing false positives in variable lighting.

- Mounting & Field of View: A higher mount with a steeper downward angle improved detection of pillars and track features at close distances.

- Mobility: The second motor increased traction and reduced motor load during the Open Challenge.

- Structure: Strengthened frame decreased vibrations and mounting failures, improving sensor stability and repeatability.

- LEDs & Indicators: LEDs moved to an elevated position beside the Pixy for better visibility and reduced interference.

- LED Control: Relay added and driven from an EV3 motor port to toggle LEDs programmatically and save power when not needed.

- Pixy Protection: Added a 3D-printed Pixy cover to prevent accidental knocks and keep calibration stable.

From 50+ test runs across varied track configurations:

Open Challenge (Wall-Following):

| Metric | Target | Achieved | Notes | Status |
|---|---|---|---|---|
| Wall-follow accuracy | ±3 cm @ 27 cm | ±2 cm | Stable with 500–1000 lux lighting | ✅ Excellent |
| Turn Execution | <2 s per 90° turn | 1.5 s | Consistent steering response | ✅ Good |
| Line Detection | >95% | 97% | Confident in line detection | ✅ Excellent |
| Lap Completion | 90% success | 90% | 45/50 runs completed | ✅ Good |
| Total 3 Laps | <45 s | 42 s | Ready for competition | ✅ Optimal |

Obstacle Challenge:

| Metric | Target | Achieved | Notes | Status |
|---|---|---|---|---|
| Obstacle Detection | >90% accuracy | 97% | Pixy 2.1 performs excellently | ✅ Excellent |
| Obstacle Avoidance | >85% | 92% | 88% | ✅ Good |
| Parking Accuracy | ±5 cm | ±3 cm | Zone detection improved with calibration | ✅ Good |
| Complete Run | <60 s | 58 s | Meets competition time limit | ✅ Optimal |
| Overall Success | >80% | 87% | Accurate at the right time | ✅ Excellent |

1. Track Simulation:

- Used WRO-compliant randomizer app (included in repo)

- Generated 50 different track configurations

- Tested both Open and Obstacle challenges

2. Sensor Validation:

- Ultrasonic: Tested at 20–250 cm range (±2 cm accuracy)

- Color sensor: Tested blue/orange detection under varied lighting

- Pixy 2.1: Tested green/red pillar detection at 0.5–1.5 m

3. Performance Metrics:

- Lap completion time: Measured from start to finish (all 3 laps)

- Success rate: Percentage of runs completing without stalling

- Accuracy: Precision of wall-following (target vs. actual distance)
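These metrics reduce to simple arithmetic over logged runs. The helpers below are a sketch assuming each run is logged as a pass/fail flag and wall distances are logged in centimetres; the function names are illustrative, not part of the team's code.

```python
from statistics import mean
from typing import Iterable, Sequence, Tuple


def success_rate(outcomes: Sequence[bool]) -> float:
    """Percentage of runs that completed without stalling."""
    if not outcomes:
        raise ValueError("no runs logged")
    return 100.0 * sum(outcomes) / len(outcomes)


def wall_follow_error(samples_cm: Iterable[float],
                      target_cm: float = 27.0) -> Tuple[float, float]:
    """Return (worst-case, mean) absolute deviation from the target distance."""
    deviations = [abs(s - target_cm) for s in samples_cm]
    return max(deviations), mean(deviations)
```

For example, 45 completed runs out of 50 gives the 90% lap-completion figure reported above.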

Lighting Variations:

- Bright (>1000 lux): 99% line detection ✅

- Normal (500-1000 lux): 97% line detection ✅ ← Competition standard

- Dim (<500 lux): 85% line detection ⚠️ (requires recalibration)

Surface Variations:

- Smooth mat: 95% line detection

- Rough surface: 92% line detection

- Color transitions: 88% detection (weakest)

| Issue | Cause | Workaround |
|---|---|---|
| Pixy false positives in low light | Insufficient lighting contrast | Ensure 500+ lux, adjust signature thresholds |
| Ultrasonic noise from angled walls | Non-perpendicular reflections | Reposition sensors, use averaging filter |
| Color sensor inconsistency | Mounting vibration | Secure mount with rigid frame |
| Motor slippage on smooth surfaces | Low friction | Increase wheel contact pressure, optimize traction |

Battery Performance vs. Runtime:

| Battery % | Hours Used | Speed Reduction | Steering Response | Status |
|---|---|---|---|---|
| 100% | 0h | 0% | Excellent | ✅ Optimal |
| 75% | 2h | 0% | Excellent | ✅ Good |
| 50% | 4h | 5% | Good | |
| 25% | 6h | 15% | Fair | |

| Metric | Result |
|---|---|
| Wall-Following Accuracy | ±2 cm @ 27 cm target distance |
| Obstacle Detection | 97% accuracy with Pixy 2.1 |
| Turning Precision | 90° turns in ~1.5 seconds |
| Lap Completion | <2 minutes (all 3 laps) |
| Success Rate | 90% across 50+ test runs |
| Power Efficiency | 450 mA max load; 25+ min operation |

This section provides a detailed overview of the key hardware components used in the ShahroodRC robot for the WRO 2025 Future Engineers category. Each component was carefully selected to ensure compatibility, reliability, and optimal performance for tasks like line following, obstacle avoidance, and precise parking. The components are seamlessly integrated with the LEGO EV3 platform, leveraging our team’s prior experience to streamline development and focus on competition performance.

A concise table of the robot's physical dimensions.

| Dimension | Measurement |
|---|---|
| Length | 26 cm |
| Width | 16 cm |
| Height | 29 cm |

Total Weight: 1.2 kg

- Type: Main Controller Unit

- Feature: Central hub for processing, motor control, and sensor integration

- Use: Manages all robot operations, including logic processing, sensor data handling, motor control, and communication

- Description: The LEGO EV3 Mindstorms Control Brick is the heart of the ShahroodRC robot, powered by a 300 MHz ARM9 processor and running the ev3dev operating system for flexible Python-based programming. It processes sensor data (e.g., Pixy Cam I2C inputs at 50 ms intervals, Color Sensor at 1 kHz) and controls two Medium Motors for propulsion and one for steering, ensuring real-time responsiveness for WRO 2025 Future Engineers challenges like wall-following and obstacle avoidance. Mounted centrally on the chassis, it connects to all components via four motor and sensor ports, eliminating external drivers. The team’s familiarity with EV3 from prior WRO competitions enabled rapid setup, while Bluetooth and USB connectivity facilitated debugging and code deployment. The built-in LCD provided real-time diagnostics (e.g., battery voltage, sensor status).

- Lessons Learned: The EV3’s robust port system and ev3dev’s Python support reduced development time compared to Arduino or Raspberry Pi setups. In future iterations, we could add a co-processor for enhanced vision processing while retaining EV3’s reliability.

- Implementation Impact: The EV3’s stable power distribution and fast sensor polling (10 ms for Color Sensor, 50 ms for Pixy Cam) enabled precise navigation, such as maintaining a 27 cm wall distance in the Open Challenge and executing the parking sequence in under 10 seconds.
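Fixed polling intervals such as the 10 ms color-sensor period are easiest to hold with a loop that schedules each iteration against a monotonic clock instead of sleeping a constant amount. The sketch below assumes a caller-supplied `step` function that reads sensors and updates motors; `run_fixed_rate` is a hypothetical helper for illustration, not the team's main loop.

```python
import time
from typing import Callable, Optional


def run_fixed_rate(step: Callable[[], None], period_s: float = 0.010,
                   iterations: Optional[int] = None) -> int:
    """Call step() every period_s seconds; return how many times it ran."""
    count = 0
    next_tick = time.monotonic()
    while iterations is None or count < iterations:
        step()  # e.g. read sensors, compute steering, command motors
        count += 1
        next_tick += period_s
        delay = next_tick - time.monotonic()
        if delay > 0:
            time.sleep(delay)
        else:
            # The step overran its slot: resynchronise instead of
            # firing a burst of late iterations back to back.
            next_tick = time.monotonic()
    return count
```

Scheduling against `next_tick` means the time spent inside `step()` does not accumulate as drift, which a plain `time.sleep(period_s)` after each step would cause.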

- Type: Vision Sensor

- Feature: Real-time object recognition, color tracking, and line tracking

- Interface: Custom I2C connection via EV3 sensor port (INPUT_1)

- Use: Detects green (signature 1) and red (signature 2) pillars for obstacle avoidance in the Obstacle Challenge, plus purple parking-zone markers (signature 3); potential for line tracking in the Open Challenge.

- Description: The Pixy 2.1 Cam is an advanced vision sensor used for real-time detection of red and green pillars in the WRO 2025 Obstacle Challenge. Mounted above the EV3 Brick, it uses a standard M12 lens with a 60° horizontal and 40° vertical field of view. Images from its 1296x976 sensor are processed on the camera itself, and detected objects are reported to the EV3 as compact blocks in a 316x208 coordinate frame, so the brick never handles raw images. Operating at up to 60 fps, it is optimized for WRO’s obstacle distances (0.5–1.5 m). Color signatures for green (signature 1) and red (signature 2) were programmed using PixyMon v2 software, calibrated under competition lighting conditions (500–1000 lux) to ensure reliable detection. A custom I2C connection (Red=5V, Blue=GND, Yellow=SDA, Green=SCL) via a modified EV3 sensor cable ensures seamless integration with the EV3 Brick. Y-position filtering (y < 75) prevents false positives, and the pillar's x position drives steering corrections (e.g., `target = (x - green) * 0.5`). Pixy 2.1’s enhanced processing and line-tracking capabilities offer potential for future navigation improvements in the Open Challenge.

- Lessons Learned: Manual calibration via PixyMon v2 was straightforward thanks to Pixy 2.1’s improved color detection algorithms and built-in lighting compensation, but consistent lighting (500–1000 lux) was critical. Future improvements could leverage Pixy 2.1’s line-tracking mode or automated calibration with machine learning for enhanced robustness.

- Implementation Impact: Pixy 2.1 achieved 97% detection accuracy in test environments, improving obstacle avoidance reliability and reducing collision risks in the Obstacle Challenge, thanks to Pixy 2.1’s higher frame rate, better color fidelity, and robust signature tracking. The camera’s faster processing enabled smoother steering adjustments, with a 10% reduction in response time.
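This section does not show how block data travels over the custom I2C link. As a hedged sketch based on our reading of the Pixy2 serial protocol documentation (little-endian sync word, `getBlocks` request type 32 and response type 33, 14-byte blocks; verify against the official reference), the code below builds a request, parses a response packet, keeps the lowest pillar in the image, and applies the steering expression quoted above. The I2C transport itself is left out, all helper names are ours, and two interpretations are assumptions: that blocks with `y < 75` are rejected, and that `green` is a target x column.

```python
import struct
from typing import List, NamedTuple, Optional, Sequence


class Block(NamedTuple):
    signature: int  # 1 = green, 2 = red, 3 = parking marker (per the text above)
    x: int
    y: int
    width: int
    height: int
    angle: int
    index: int
    age: int


BLOCK_FORMAT = "<HHHHHhBB"  # 14 bytes per block, little-endian
BLOCK_SIZE = struct.calcsize(BLOCK_FORMAT)


def build_get_blocks_request(sigmap: int = 0b011, max_blocks: int = 4) -> bytes:
    """Request packet: sync 0xC1AE (no checksum), type 32, payload length 2."""
    return struct.pack("<HBBBB", 0xC1AE, 32, 2, sigmap, max_blocks)


def parse_blocks_response(packet: bytes) -> List[Block]:
    """Parse a checksummed response: sync 0xC1AF, type 33, length, 16-bit checksum."""
    sync, ptype, length, checksum = struct.unpack_from("<HBBH", packet, 0)
    if sync != 0xC1AF or ptype != 33:
        raise ValueError("not a getBlocks response")
    payload = packet[6:6 + length]
    if len(payload) != length or (sum(payload) & 0xFFFF) != checksum:
        raise ValueError("truncated packet or checksum mismatch")
    return [Block(*struct.unpack_from(BLOCK_FORMAT, payload, offset))
            for offset in range(0, length - BLOCK_SIZE + 1, BLOCK_SIZE)]


def nearest_pillar(blocks: Sequence[Block], signatures=(1, 2),
                   y_min: int = 75) -> Optional[Block]:
    """Drop blocks above row y_min (assumed background); lowest block = closest."""
    candidates = [b for b in blocks if b.signature in signatures and b.y >= y_min]
    return max(candidates, key=lambda b: b.y, default=None)


def pillar_steering(block: Block, target_x: float) -> float:
    """Steering term from the text, (x - green) * 0.5, with green as a target column."""
    return (block.x - target_x) * 0.5
```

Validating the checksum before using any block keeps a corrupted I2C read from turning into a wild steering command.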

- Type: Distance Sensor

- Feature: Measures distance to walls and obstacles using ultrasonic waves

- Interface: LEGO EV3 Sensor Port (INPUT_2 for the right sensor, `rast`; INPUT_3 for the left sensor, `chap`)

- Use: Enables wall-following and distance-based navigation in Open and Obstacle Challenges

- Description: Two EV3 Ultrasonic Sensors, mounted on the robot’s front (left and right, included in `3d-files/robot_complete.io`), measure distances for wall-following tasks. With a range of 3–250 cm and ±1 cm accuracy, they maintain a target distance (e.g., 27 cm in Open Challenge, 15 cm during parking). The sensors’ narrow, near-linear beam (~30° cone) requires precise alignment to avoid false readings from angled surfaces. Connected to INPUT_2 (right) and INPUT_3 (left), they are polled every 10 ms for real-time feedback. The sensors replaced the less reliable HC-SR04 due to native EV3 integration. Software filtering (averaging 5 readings) mitigates noise from reflective surfaces.

- Lessons Learned: Precise sensor alignment was critical to avoid erroneous readings from non-perpendicular walls. Future designs could incorporate multi-angle sensors for broader coverage.

- Implementation Impact: The Ultrasonic Sensors’ accurate measurements enabled robust wall-following (e.g., `target = (fc * 1.3) - (fr * 1.7)`), ensuring stable navigation in both challenges.
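The 5-reading averaging filter and the weighted steering expression can be sketched as follows. This is a minimal illustration: the window size comes from the text, but this section does not define `fc` and `fr`, so they are treated here simply as two filtered distance readings in centimetres (an assumption). `MovingAverage` and `wall_follow_target` are hypothetical names.

```python
from collections import deque


class MovingAverage:
    """Average of the last n ultrasonic readings (n = 5 as described above)."""

    def __init__(self, n: int = 5):
        self._window = deque(maxlen=n)  # the oldest reading drops out automatically

    def update(self, reading_cm: float) -> float:
        self._window.append(reading_cm)
        return sum(self._window) / len(self._window)


def wall_follow_target(fc: float, fr: float) -> float:
    """Weighted difference quoted in the text: (fc * 1.3) - (fr * 1.7)."""
    return (fc * 1.3) - (fr * 1.7)
```

One filter instance per sensor keeps the left and right histories independent.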

- Type: Light and Color Detection Sensor

- Feature: Detects colors (e.g., blue=2, orange=5) and light intensity for navigation

- Interface: LEGO EV3 Sensor Port (INPUT_4)

- Use: Enables line following and zone detection for Open and Obstacle Challenges

- Description: The EV3 Color Sensor, mounted at the robot’s front center (included in `3d-files/robot_complete.io`), detects blue (color codes 1 and 2) and orange (color codes 5 and 7) lines to guide navigation and trigger turns in the Open Challenge. The sensor has no dedicated orange class, so in color mode the orange line reads as red (5) or brown (7), and the blue line as blue (2) or black (1). Operating in color mode with a 1 kHz sampling rate, it requires a 0.5–1 cm distance from the surface for accurate detection (95% accuracy in tests under 500–1000 lux lighting). Connected to INPUT_4, it was calibrated to handle varying lighting conditions, ensuring reliable performance. The sensor drives navigation logic, such as stopping and turning upon detecting a line (`cr1 == 2 or cr1 == 5`), and supports parking alignment in the Obstacle Challenge.

- Lessons Learned: Maintaining a 0.5–1 cm distance was critical for accurate color detection; variations in lighting required multiple calibration rounds. Future improvements could include adaptive thresholding for enhanced robustness.

- Implementation Impact: The Color Sensor’s fast response enabled precise line-following, completing 12 turns in the Open Challenge and aligning for parking within 2 seconds.
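The sketch below maps the EV3 color codes listed above to line types and adds a simple debounce that reports a line only after a few consecutive matching readings, so a single noisy sample does not trigger a turn. `LineDetector`, `classify_line`, and the `confirm` count are hypothetical, not taken from the team's code.

```python
from typing import Optional

# ev3dev Color Sensor COL-COLOR codes: 0 none, 1 black, 2 blue, 3 green,
# 4 yellow, 5 red, 6 white, 7 brown (there is no "orange" code).
BLUE_CODES = {1, 2}
ORANGE_CODES = {5, 7}


def classify_line(code: int) -> Optional[str]:
    if code in BLUE_CODES:
        return "blue"
    if code in ORANGE_CODES:
        return "orange"
    return None


class LineDetector:
    """Report a line once, after `confirm` consecutive matching readings."""

    def __init__(self, confirm: int = 2):
        self.confirm = confirm  # hypothetical debounce length
        self._last: Optional[str] = None
        self._count = 0

    def update(self, code: int) -> Optional[str]:
        line = classify_line(code)
        if line is not None and line == self._last:
            self._count += 1
        else:
            self._count = 1 if line is not None else 0
        self._last = line
        # Fire exactly once per crossing, when the streak reaches `confirm`.
        return line if line is not None and self._count == self.confirm else None
```

Because the detector fires only when the streak first reaches `confirm`, a slow crossing over a wide line still counts as one event.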

- Type: DC Motor (Medium)

- Feature: Provides propulsion (rear wheels) and steering (front wheels)

- Interface:

- Steering motor → `OUTPUT_B`

- Primary drive motor → `OUTPUT_D`

- Secondary drive motor (Open Challenge only) → `OUTPUT_C`

- Use: Drives rear wheels through a differential and controls front-wheel steering via a rack-and-pinion system.

- Configuration for Challenges:

- Obstacle Challenge (final submitted version): Only one Medium Motor on `OUTPUT_D` drives the differential (the gear of the second motor is physically removed).

- Open Challenge: A second Medium Motor can be connected to `OUTPUT_C` and mechanically coupled to the same differential gear for extra torque and higher speed (both motors synchronized in code). This configuration is fully WRO-compliant because the two motors drive a single mechanical output (the differential).

- Description: The ShahroodRC robot uses one or two EV3 Medium Motors for propulsion (`motor_b` on `OUTPUT_D`, and `motor_c` on `OUTPUT_C` only in the Open Challenge).

Both 20-tooth gears of the motors drive a shared 12-tooth gear.

A 20-tooth gear on the same axle as the 12-tooth gear then drives the 24-tooth differential gear.

Final gear ratio = (20:12) × (20:24) = 25:18 ≈ 1.39:1

(the differential turns about 39% faster than the motors, and output torque is about 18/25 = 72% of motor torque, ignoring friction).

This compound gear train trades some torque for top speed; in the Open Challenge, the second synchronized motor adds drive torque. The steering is performed by a single Medium Motor (`motor_a` on `OUTPUT_B`) connected to a rack-and-pinion mechanism. Medium Motors were chosen over Large Motors because of their significantly lower weight (120 g vs 170 g) and sufficient torque for our 1.1–1.2 kg robot.

- Lessons Learned: Early tests with near-direct drive showed occasional motor strain/stalling during tight parking maneuvers. The final 20-12-20-24 compound gear train, combined with the dual-motor option in the Open Challenge, reduced peak current from approximately 600 mA to approximately 400 mA during parking and eliminated stalling. Adding the second drive motor (Open Challenge only) further eliminated any remaining strain at higher speeds.

- Implementation Impact: Precise encoder-based control (`on_for_degrees`, `on_for_rotations`) enabled the parking sequence to complete in under 10 seconds with almost zero slippage. The modular drive design (single/dual motor) allowed us to optimize separately for torque (Obstacle) and speed (Open) without hardware redesign.
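The gear-ratio arithmetic can be checked with exact fractions. The sketch below multiplies driver/driven tooth counts per mesh to get the output-to-motor speed ratio and converts motor encoder degrees into distance travelled when driving straight. The 5.6 cm wheel diameter and the helper names are hypothetical, since the robot's wheel size is not given in this section; friction losses are ignored.

```python
import math
from fractions import Fraction


def train_ratio(*meshes):
    """Output speed / input speed for meshes given as (driver_teeth, driven_teeth)."""
    ratio = Fraction(1)
    for driver, driven in meshes:
        ratio *= Fraction(driver, driven)
    return ratio


SPEED_RATIO = train_ratio((20, 12), (20, 24))  # Fraction(25, 18): output outruns the motor
TORQUE_RATIO = 1 / SPEED_RATIO                  # Fraction(18, 25): ideal torque multiplier


def wheel_travel_cm(motor_degrees: float, wheel_diameter_cm: float = 5.6) -> float:
    """Distance covered when driving straight (differential speed = wheel speed)."""
    wheel_turns = motor_degrees / 360 * float(SPEED_RATIO)
    return wheel_turns * math.pi * wheel_diameter_cm


print(f"speed x{float(SPEED_RATIO):.3f}, torque x{float(TORQUE_RATIO):.2f}")
print(f"360 motor degrees -> {wheel_travel_cm(360):.1f} cm")
```

A ratio above 1 means the train speeds the output up; with a measured wheel diameter, `wheel_travel_cm` gives the degrees to pass to `on_for_degrees` for a given parking distance.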

Detailed Pin Functionality:

1. Power Distribution (Pins 1-2):

- Red Wire (+9V): Delivers regulated power from the EV3 battery to the motors.

- Black Wire (GND): Provides a ground reference for the complete circuit.

- Voltage Regulation: EV3 Brick includes an internal voltage regulator maintaining a stable 9-9.5V.

- Short-Circuit Protection: Prevents component damage if wires inadvertently touch.

2. Motor Control (Pins 3-4):

- Yellow Wire (Phase A): Drives motor in forward direction via PWM modulation

- Green Wire (Phase B): Drives motor in reverse direction via PWM modulation

- PWM Frequency: ~10 kHz from EV3 ARM microcontroller

- Direction Control: By energizing Phase A or Phase B exclusively, or with varying durations (PWM duty cycle)

- Speed Regulation: Motor speed proportional to PWM duty cycle (0-100%)

3. Encoder Feedback (Pins 5-6):

- White Wire (Channel A): Primary quadrature encoder signal from motor shaft

- Blue Wire (Channel B): Secondary quadrature encoder signal (90° phase shift)

- Resolution: 360 encoder ticks per full motor rotation

- Position Tracking: Enables `motor.position` in Python (absolute degrees)

- Advanced Control: Enables precise movements like `on_for_degrees(50, 360)` for exact rotations

- Closed-Loop Feedback: EV3 firmware uses encoder signals to regulate speed and detect stalls
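Quadrature decoding is what turns two encoder channels shifted by 90° into a signed position. The EV3 firmware does this internally; the Python sketch below only illustrates the principle by counting every edge of the Gray-code sequence of (A, B) states. Which rotation counts as positive is an arbitrary convention here, and `decode` is a hypothetical helper.

```python
from typing import Iterable, Tuple

# (A, B) states in the order they occur for one direction of rotation.
SEQUENCE = [(0, 0), (0, 1), (1, 1), (1, 0)]


def decode(states: Iterable[Tuple[int, int]]) -> int:
    """Return the net edge count for a stream of (A, B) samples."""
    it = iter(states)
    first = next(it, None)
    if first is None:
        return 0
    prev = SEQUENCE.index(first)
    position = 0
    for state in it:
        cur = SEQUENCE.index(state)
        step = (cur - prev) % 4
        if step == 1:
            position += 1      # one step forward in the sequence
        elif step == 3:
            position -= 1      # one step backward
        elif step == 2:
            raise ValueError("skipped a state: sampling too slow")
        prev = cur             # step == 0 means no change
    return position
```

Because only one channel changes per step, the direction of each step is unambiguous; a jump of two states means a transition was missed.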

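Pin Layout:

The connector is a 6-pin RJ12-style plug with an offset latch; its pins sit in a single row, numbered 1 to 6.

| Pin | Wire | Motor (output) port | Sensor (input) port |
|---|---|---|---|
| 1 | White | M1 (motor power) | Analog input |
| 2 | Black | M2 (motor power) | GND |
| 3 | Red | GND | GND |
| 4 | Green | +4.3 V | +4.3 V |
| 5 | Yellow | Tacho A | Digital 0 (I2C SCL) |
| 6 | Blue | Tacho B | Digital 1 (I2C SDA) |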

How to Identify Wires Before Cutting:

- Use a digital multimeter in continuity/voltage mode

- Test against known references:

- Measure voltage with Red (pin 3, GND on both port types) as reference

- Green should show about +4.3 V with the brick switched on

- White/Black on a motor port show a voltage only while the motor is being driven

- Mark wires with colored electrical tape before soldering

- Always verify the pinout before connecting to the EV3 to avoid damage

Cutting & Customizing EV3 Cables:

- Always power OFF the EV3 Brick before cutting cables

- Use insulated wire strippers and soldering tools

- Test connections with a multimeter before powering on

- Insulate unused wires with electrical tape to prevent shorts

Common Modifications:

- Pixy 2.1 Integration: Use Yellow (SCL) and Blue (SDA) on a sensor port for I2C communication

- Relay Control: Drive the relay coil from a motor port's White/Black pair, which the brick switches on and off like a motor

- Sensor Extensions: Custom devices can use the +4.3 V (Green) and GND (Red) lines; keep the load small, since this supply is meant for sensor and encoder electronics

| Application | Wires Used | Purpose | Example |
|---|---|---|---|
| Standard Motor | All 6 | Full motor control with feedback | Drive/steering motors |
| I2C Sensors | Red/Black (GND), Green (+4.3 V), Yellow (SCL), Blue (SDA) | Communication with smart sensors | Pixy Cam, advanced encoders |
| Switched-Power Devices | White, Black (motor port) | PWM-switched supply to external circuits | Relay coils, LED drivers |
| Custom Relay | White, Black (motor port); other 4 unused | Drive relay with motor port | LED control (see section below) |

- Integration Details: The EV3 Control Brick manages all components via its motor ports (OUTPUT_B for steering, OUTPUT_C and OUTPUT_D for propulsion) and four sensor ports (INPUT_1 for the Pixy Cam, INPUT_2/3 for the Ultrasonic Sensors, INPUT_4 for the Color Sensor). The Pixy Cam’s custom I2C connection, using a modified EV3 sensor cable (I2C clock on Yellow, data on Blue, plus power and ground), eliminated external hardware and simplified integration.

- Component Placement: The EV3 Brick is centrally mounted for balance, with the Color Sensor at the front center (0.5–1 cm from the surface), Ultrasonic Sensors on the front left and right, Pixy Cam elevated above the Brick for optimal obstacle detection, and the two status LEDs mounted on the top of the robot on either side of the Pixy Camera.

- Component Selection: The EV3 platform was chosen for its robust ecosystem and compatibility; its sensors replaced less reliable options such as the HC-SR04 ultrasonic sensor. The Medium Motors’ lighter weight (120 g vs. 170 g for Large Motors) optimized the robot’s 1.2 kg design for agility.

- Custom Parts: A custom 3D-printed mount for the Pixy 2.1 camera (3d-files/pixy-cam-mount.stl) ensures optimal positioning and vibration isolation. All other components use standard LEGO pieces and connectors. The complete robot design, including the LEGO chassis and component layout, is documented in 3d-files/robot_complete.io.

- Lessons Learned:

- Precise alignment of Ultrasonic Sensors was critical to avoid false readings from reflective surfaces.

- PixyMon v2 calibration for Pixy 2.1 was efficient but still required manual tuning under competition lighting; future versions could integrate automated lighting-adaptive calibration.

- Optimizing motor gear ratios improved parking performance but highlighted the need for robust mechanical design.

- Future Improvements:

- Adding a secondary vision sensor for redundancy in obstacle detection.

- Using advanced motor encoders for finer control during parking.

- Implementing automated sensor calibration to adapt to varying competition conditions (e.g., lighting, surface reflectivity).

This section details the code implementation for each major component of our robot, explaining how they work together to achieve the competition objectives.

The drive motors (motor_b on OUTPUT_D and motor_c on OUTPUT_C for Open Challenge; motor_b on OUTPUT_D for Obstacle Challenge) propel the robot. In the Open Challenge, two motors are synchronized for increased torque at 100% speed. In the Obstacle Challenge, a single motor is used for simplicity.

```python
from ev3dev2.motor import MediumMotor, OUTPUT_D, OUTPUT_C, SpeedPercent

# Initialize the drive motors
motor_b = MediumMotor(OUTPUT_D)
motor_c = MediumMotor(OUTPUT_C)  # Used only in Open Challenge


def drive_forward(speed_percent):
    """
    Drive the robot forward at a specified speed.

    Args:
        speed_percent (int): Speed percentage (1 to 100)
    """
    motor_b.on(SpeedPercent(speed_percent))
    motor_c.on(SpeedPercent(speed_percent))  # Sync both motors in Open Challenge


def drive_backward(speed_percent):
    """
    Drive the robot backward at a specified speed.

    Args:
        speed_percent (int): Speed percentage (1 to 100)
    """
    motor_b.on(SpeedPercent(-speed_percent))
    motor_c.on(SpeedPercent(-speed_percent))  # Sync both motors in Open Challenge


def stop_drive():
    """Stop the drive motors."""
    motor_b.off()
    motor_c.off()
```

Implementation Notes:

- Speed set to 100% in Open Challenge for optimal performance, adjustable to 40% in Obstacle Challenge for precise maneuvers.

- In Obstacle Challenge, motor_c is disconnected from the drivetrain (its gear is removed), and only motor_b drives the differential, allowing more accurate movement.

- For precise maneuvers, we use the on_for_degrees() or on_for_rotations() methods.
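Using on_for_degrees() for a set travel distance requires converting that distance into motor encoder degrees. A minimal sketch, assuming no wheel slip; motor_degrees_for_distance and its wheel_turns_per_motor_turn parameter are hypothetical names, not part of ev3dev2:

```python
import math

WHEEL_DIAMETER_CM = 4.95  # LEGO Tire 49.5 x 20


def motor_degrees_for_distance(distance_cm, wheel_turns_per_motor_turn=1.0,
                               wheel_diameter_cm=WHEEL_DIAMETER_CM):
    """Motor encoder degrees needed to roll distance_cm, assuming no slip.

    wheel_turns_per_motor_turn is the overall drivetrain ratio (how many
    times the rear wheels turn for one motor revolution).
    """
    wheel_turns = distance_cm / (math.pi * wheel_diameter_cm)
    motor_turns = wheel_turns / wheel_turns_per_motor_turn
    return motor_turns * 360.0
```

For the 20-12-20-24 gear train described in the powertrain table, the tooth counts give (20/12) × (20/24) = 25/18 wheel turns per motor turn, so a 20 cm move would be motor_degrees_for_distance(20, 25 / 18). On the robot, the rounded result could then be passed to motor_b.on_for_degrees(SpeedPercent(30), degrees).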

The steering motor (motor_a on OUTPUT_B) controls the robot's direction by adjusting the front wheels. For wall-following it uses pure proportional control with no extra gain factor, which gives smooth and accurate steering: the target angle is compared directly with the current encoder position.

```python
from ev3dev2.motor import MediumMotor, OUTPUT_B

# Initialize the steering motor; reset() stops it and restarts its position count
motor_a = MediumMotor(OUTPUT_B)
motor_a.reset()


def clamp(value, minimum, maximum):
    """
    Utility function to limit a value between minimum and maximum bounds.

    Args:
        value: The value to clamp
        minimum: Minimum allowed value
        maximum: Maximum allowed value

    Returns:
        Clamped value
    """
    if value > maximum:
        value = maximum
    if value < minimum:
        value = minimum
    return value


def amotor(degrees, cl=50):
    """
    Function to control the steering motor with proportional control.

    Args:
        degrees: Target position in degrees
        cl: Control limit for maximum power (default 50)
    """
    diff = degrees - motor_a.position  # Error in encoder degrees
    diff = clamp(diff, -cl, cl)        # Limit motor power to ±cl percent
    motor_a.on(diff)
```

- Note: Unlike the Obstacle Challenge, this version does not use a gain factor (e.g., *0.7), as wall-following requires direct response.

Control Algorithm Explanation: The steering system uses a proportional control algorithm where the motor power is directly proportional to the difference between the target angle and the current position (limited to ±cl). Because the commanded power shrinks as the wheels approach the target, the motor slows down near the target angle, which keeps the steering adjustments smooth.
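The behavior of this loop can be explored off-robot with a toy model. The sketch below is a simplified simulation, not a model of the real EV3 motor: it supposes the steering moves plant_gain degrees per step for each unit of commanded power (plant_gain is a hypothetical constant) and reuses the same clamping as amotor(). The gain parameter only loosely mirrors the 0.7 factor of the Obstacle Challenge variant, whose exact placement is not shown in the excerpt.

```python
def clamp(value, minimum, maximum):
    """Limit value to the range [minimum, maximum]."""
    return max(minimum, min(maximum, value))


def simulate_steering(target, start=0.0, steps=20, cl=50, gain=1.0,
                      plant_gain=0.5):
    """Toy model of the amotor() loop; returns the position after each step.

    Each step: power = clamp(gain * error, -cl, cl), then the hypothetical
    plant moves the steering by plant_gain degrees per unit of power.
    """
    position = start
    history = [position]
    for _ in range(steps):
        power = clamp(gain * (target - position), -cl, cl)
        position += plant_gain * power
        history.append(position)
    return history
```

With the default values and a target inside the clamp range, the error halves on every step and the position approaches the target without overshoot. Raising the loop gain (for example gain=3.0) makes the position overshoot and oscillate before settling, which illustrates why a reduced gain can help.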

The Pixy 2.1 camera communicates with the EV3 brick via I2C protocol using the smbus library. Unlike Pixy 1, Pixy 2.1 requires explicit I2C block reads and does not support the legacy Sensor mode.

```python
from time import sleep

from ev3dev2.port import LegoPort
from ev3dev2.sensor import INPUT_1
from smbus import SMBus

# Configure EV3 sensor port 1 for raw I2C access
pixy_port = LegoPort(INPUT_1)
pixy_port.mode = 'other-i2c'
sleep(0.5)  # Give the port time to switch modes

pixy_address = 0x54  # Default I2C address for Pixy 2
bus = SMBus(3)  # I2C bus 3 corresponds to input port 1 (bus number = port + 2)


def read_pixy_block():
    """
    Request and read a single object block from Pixy 2.1.

    Returns:
        dict: Contains 'signature', 'x', 'y', 'width', 'height', or None
    """
    # getBlocks request: sync (0xc1ae, little-endian), type 32, payload length 2,
    # sigmap = 3 (signatures 1 and 2), max blocks = 1
    request = [174, 193, 32, 2, 3, 1]
    bus.write_i2c_block_data(pixy_address, 0, request)

    # Read 20-byte response: 6-byte header + one 14-byte block
    raw = bus.read_i2c_block_data(pixy_address, 0, 20)

    # Parse fields (little-endian format)
    sig = raw[6] + (raw[7] << 8)
    x = raw[8] + (raw[9] << 8)
    y = raw[10] + (raw[11] << 8)
    w = raw[12] + (raw[13] << 8)
    h = raw[14] + (raw[15] << 8)

    # Validate data (treat a zero signature or x as "no block")
    if sig == 0 or x == 0:
        return None
    return {'signature': sig, 'x': x, 'y': y, 'width': w, 'height': h}


def detect_pillar():
    """
    Detect red (sig=2) or green (sig=1) pillars.

    Returns:
        int: 1 = green, 2 = red, 0 = none
    """
    block = read_pixy_block()
    if block and block['y'] > 70:  # Ignore distant objects near the top of the frame
        return block['signature']
    return 0
```

Key Notes:

- Pixy 2.1 must be configured in "I2C mode" using PixyMon v2 before use.

- The I2C address is 0x54 by default.

- Data is read in 20-byte blocks; fields are little-endian.

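- Y-position filtering (y > 70) ignores objects near the top of the frame, such as distant objects and background clutter, since the y coordinate increases toward the bottom of the image.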

- This method is used in the actual obstacle-challenge-code.py.
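For debugging without the robot, the request and response handling can be separated from the I2C calls. A sketch assuming the Pixy2 packet layout (request sync bytes 0xae 0xc1 with getBlocks type 32; response sync bytes 0xaf 0xc1 with type 33, a 16-bit checksum equal to the sum of the payload bytes, and 14 bytes per block). The function names are hypothetical, and the sync and checksum checks are additions not present in read_pixy_block():

```python
# Pixy2 packet helpers (sketch). Sync words are sent little-endian:
# 0xc1ae for requests (no checksum) and 0xc1af for responses (with checksum).
REQUEST_SYNC = (0xAE, 0xC1)
RESPONSE_SYNC = (0xAF, 0xC1)
GET_BLOCKS_REQUEST = 32
GET_BLOCKS_RESPONSE = 33
BLOCK_SIZE = 14  # Bytes per detected block in the payload


def build_get_blocks_request(sigmap, max_blocks):
    """Request bytes: sync, type, payload length (2), sigmap, max blocks."""
    return [REQUEST_SYNC[0], REQUEST_SYNC[1], GET_BLOCKS_REQUEST, 2,
            sigmap, max_blocks]


def _u16(lo, hi):
    """Combine two bytes into a little-endian 16-bit value."""
    return lo | (hi << 8)


def parse_get_blocks_response(raw):
    """Return a list of block dicts, or None if the packet looks invalid."""
    if len(raw) < 6 or (raw[0], raw[1]) != RESPONSE_SYNC:
        return None
    if raw[2] != GET_BLOCKS_RESPONSE:
        return None
    length = raw[3]
    payload = list(raw[6:6 + length])
    # Checksum: 16-bit sum of the payload bytes, stored in bytes 4-5
    if len(payload) != length or (sum(payload) & 0xFFFF) != _u16(raw[4], raw[5]):
        return None
    blocks = []
    for i in range(0, length - length % BLOCK_SIZE, BLOCK_SIZE):
        b = payload[i:i + BLOCK_SIZE]
        blocks.append({'signature': _u16(b[0], b[1]), 'x': _u16(b[2], b[3]),
                       'y': _u16(b[4], b[5]), 'width': _u16(b[6], b[7]),
                       'height': _u16(b[8], b[9])})
    return blocks
```

On the robot, the list returned by bus.read_i2c_block_data(pixy_address, 0, 20) could be passed straight to parse_get_blocks_response(); a 20-byte read holds the 6-byte header plus one block, and an empty list means no block was reported.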

Detection Strategy:

- The Pixy is programmed to recognize two color signatures: green (signature 1) and red (signature 2).

- We filter detections based on Y-position to avoid false positives from distant objects.

- The X-position is used to calculate steering corrections.

- Calibration: The Pixy Cam was trained using PixyMon v2 software to recognize green (signature 1) and red (signature 2) pillars under competition lighting (500–1000 lux), ensuring reliable detection.

Calibration Step By Step:

- Connect Pixy Cam to a computer via USB and open PixyMon v2.

- Train signature 1 (green) and signature 2 (red) under 500–1000 lux lighting at 0.5–1.5 m distance.

- Adjust the Y-position threshold (y > 70 in detect_pillar()) based on test runs to eliminate false positives.

The color sensor detects blue lines (color in [1, 2], stored as abi) and orange lines (color in [5, 7], stored as narengi) on the track, which determine the robot's turning direction in the open challenge.

```python
from time import sleep

from ev3dev2.sensor import INPUT_4
from ev3dev2.sensor.lego import ColorSensor

# Initialize color sensor
color_sensor = ColorSensor(INPUT_4)


def get_track_color():
    """
    Read the current track color.

    Returns:
        int: ev3dev color code (0=No color, 1=Black, 2=Blue, 5=Red, 7=Brown, etc.);
        orange lines typically read as 5 or 7
    """
    return color_sensor.color


def wait_for_color(target_color):
    """
    Wait until a specific color is detected.

    Args:
        target_color (int): Color code to wait for
    """
    while color_sensor.color != target_color:
        sleep(0.01)  # Prevent excessive CPU usage
```

Color Detection Logic:

- Detects blue (1, 2) for left turns and orange (5, 7) for right turns in the Open Challenge.

- Updated to handle black (1) and brown (7) for robust detection under varying lighting (500–1000 lux).
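The mapping above can be kept in one place, so the Open Challenge code decides the driving direction from the first line it sees. A hypothetical helper (the names are illustrative; abi and narengi play this role in the project code), assuming the color codes listed above:

```python
BLUE_CODES = (1, 2)    # Black or blue reading -> blue line ("abi")
ORANGE_CODES = (5, 7)  # Red or brown reading -> orange line ("narengi")


def classify_line(color_code):
    """Return 'blue', 'orange', or None for an ev3dev color code."""
    if color_code in BLUE_CODES:
        return 'blue'
    if color_code in ORANGE_CODES:
        return 'orange'
    return None


def driving_direction(color_codes):
    """Return 'left' or 'right' from the first line seen, else None."""
    for code in color_codes:
        line = classify_line(code)
        if line == 'blue':
            return 'left'
        if line == 'orange':
            return 'right'
    return None
```

For example, a stream of readings such as [6, 6, 2, 5] (white mat, then a blue line) selects left turns, because only the first line crossed decides the direction.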

Calibration Step By Step:

- Place the sensor 0.5–1 cm above the track surface.

- Use ev3dev’s color_sensor.color mode (COL-COLOR) to record values for blue (2) and orange (5) under competition lighting.

- Adjust thresholds if detection accuracy drops below 90%.

The EV3 brick's LEDs provide visual feedback about the robot's state and detected obstacles.

```python
from ev3dev2.led import Leds

# Initialize LEDs
leds = Leds()


def set_led_state(state):
    """
    Function to set LED colors based on robot state.

    Args:
        state (str): 'idle', 'red_pillar', 'green_pillar', 'turning'
    """
    if state == 'idle':
        leds.set_color('LEFT', 'ORANGE')
        leds.set_color('RIGHT', 'ORANGE')
    elif state == 'red_pillar':
        leds.set_color('LEFT', 'GREEN')
        leds.set_color('RIGHT', 'GREEN')
    elif state == 'green_pillar':
        leds.set_color('LEFT', 'RED')
        leds.set_color('RIGHT', 'RED')
    elif state == 'turning':
        leds.set_color('LEFT', 'AMBER')
        leds.set_color('RIGHT', 'AMBER')
```

LED State Logic:

- Orange: Robot is in idle/normal driving mode

- Green: Red pillar detected, preparing for right turn

- Red: Green pillar detected, preparing for left turn

- Amber: Robot is executing a turn maneuver

Two ultrasonic sensors (rast on INPUT_2, chap on INPUT_3) manage wall-following with a non-linear control algorithm.

```python
import math

from ev3dev2.sensor import INPUT_2, INPUT_3
from ev3dev2.sensor.lego import UltrasonicSensor

# Initialize ultrasonic sensors
rast = UltrasonicSensor(INPUT_2)  # Right sensor
chap = UltrasonicSensor(INPUT_3)  # Left sensor


def get_distances():
    """
    Read distances from both ultrasonic sensors.

    Returns:
        tuple: (right_distance, left_distance) in centimeters
    """
    return rast.distance_centimeters, chap.distance_centimeters


def wall_following_control():
    """
    Non-linear control for initial alignment (first 120 iterations).
    Uses square-root response for sensitivity at close range.

    Returns:
        float: Steering correction value
    """
    r = rast.distance_centimeters
    c = chap.distance_centimeters
    fr = (-2 * math.sqrt(11 * r)) + 100
    fc = (-2 * math.sqrt(11 * c)) + 100
    target = (fc * 1.3) - (fr * 1.3)  # Symmetric weighting
    return clamp(target, -28, 28)  # clamp() as defined in the steering section
```

Wall Following Algorithm:

- Our wall following system uses a non-linear control function that provides a more sensitive response at closer distances. The square root function in our correction algorithm ensures that small distance changes near the wall result in larger steering corrections, while larger distances result in more gradual adjustments.

- In this version both correction terms share the same 1.3 weighting, so equal left and right distances give a zero steering target (the Obstacle Challenge excerpt later in this document uses 1.3 and 1.7).

- Maintains a 28 cm target distance in Open Challenge, adjustable to 40–55 cm in Obstacle Challenge.
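The effect of the square root is easy to check numerically. A sketch reusing the formula and the ±28 clamp from wall_following_control(), with the distances passed in as plain numbers instead of being read from rast and chap (correction_term and wall_target are illustrative names):

```python
import math


def correction_term(distance_cm):
    """Square-root correction used for fr and fc: 100 - 2 * sqrt(11 * d)."""
    return (-2 * math.sqrt(11 * distance_cm)) + 100


def wall_target(right_cm, left_cm, w_left=1.3, w_right=1.3, limit=28):
    """Steering target as in wall_following_control(), clamped to +/-limit."""
    fr = correction_term(right_cm)
    fc = correction_term(left_cm)
    target = (fc * w_left) - (fr * w_right)
    return max(-limit, min(limit, target))
```

Here correction_term(10) - correction_term(20) is about 8.7, while correction_term(50) - correction_term(60) is only about 4.5, so the same 10 cm change produces roughly twice the correction near the wall.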

The EV3 button is used to start the robot after manual positioning.

```python
from ev3dev2.button import Button

# Initialize button
btn = Button()


def wait_for_start():
    """
    Wait for the user to press the center button to start.
    """
    btn.wait_for_bump('enter')
```

Start Procedure:

- The robot waits in a holding pattern until the center button is pressed.

- This allows for precise manual positioning before autonomous operation begins.

- After the button press, the robot sets the LEDs to the idle state (orange) and begins autonomous operation.

The main program integrates all components into a single control loop:

```python
# Initialize all components
# (initialize_components(), challenge_complete, execute_turn_sequence(),
# execute_obstacle_avoidance() and normal_driving() stand for challenge-specific
# code that is not shown in this section)
initialize_components()

# Wait for start signal
wait_for_start()
set_led_state('idle')

# Main control loop
while not challenge_complete:
    # Check for color line (Open Challenge)
    current_color = get_track_color()

    # Check for pillars (Obstacle Challenge)
    pillar_type = detect_pillar()

    # Execute appropriate behavior based on detections
    if current_color in [2, 5]:  # Blue (2) or orange (reads as red, 5) line
        execute_turn_sequence(current_color)
    elif pillar_type in [1, 2]:  # Green (1) or red (2) pillar
        execute_obstacle_avoidance(pillar_type)
    else:
        # Normal driving mode
        normal_driving()

    # Continuous wall following adjustment
    steering_correction = wall_following_control()
    amotor(steering_correction)
```

This integrated approach lets all sensors and actuators work together to navigate the competition course.

The ShahroodRC robot is built using components from the LEGO MINDSTORMS Education Core Set (set 45544), supplemented with additional LEGO EV3 sets, to deliver robust performance, reliability, and precise maneuverability for the WRO 2025 Future Engineers category. The robot’s dimensions are 26 cm (length), 16 cm (width), and 29 cm (height), optimized for precise parking in the competition’s parking zone (fixed 20 cm width, length 39 cm = 1.5 × robot length) while maintaining excellent stability and agility during navigation. Weighing 1.2 kg, the robot employs a rear-wheel drive system with front-wheel steering, powered by up to three EV3 Medium Motors (two for propulsion in the Open Challenge, one for propulsion in the Obstacle Challenge, and one for steering), enabling smooth movement and precise directional control across WRO 2025 challenges like wall-following, obstacle avoidance, and precise parking.

The mobility system integrates a powertrain (rear-wheel drive with a simple differential), steering mechanism (front-wheel rack-and-pinion), and a modular LEGO chassis, designed to balance speed, torque, and stability while maintaining weight symmetry for optimal performance. This section provides comprehensive details on the system’s design, implementation, testing, and lessons learned, enabling another team to replicate the system and offering insights for further optimization.

The complete LEGO chassis design, shown in 3d-files/robot_complete.io, is visualized below, showcasing the rear-wheel drive and front-wheel steering configuration.

Overview

The ShahroodRC robot uses a rear-wheel drive with front-wheel steering configuration, featuring two powered rear LEGO Tire 49.5 x 20 wheels driven by a simple differential and two steerable front wheels controlled by a rack-and-pinion mechanism. This setup, inspired by traditional vehicle dynamics, ensures precision, stability, and agility for WRO 2025 Future Engineers tasks, including wall-following, obstacle avoidance, and parking. The system is powered by up to three EV3 Medium Motors (two for propulsion in the Open Challenge, one for propulsion in the Obstacle Challenge, and one for steering), each rated by LEGO at 240–250 rpm with 8 N·cm running torque and 12 N·cm stall torque; they were selected for their lightweight design (120 g each) and compatibility with the LEGO EV3 ecosystem. The 1.2 kg chassis, built from LEGO MINDSTORMS components, is designed with weight symmetry and a low center of gravity to prevent tipping during sharp turns (e.g., 90° turns in 1.5 seconds) and maintain stability at speeds up to 0.25 m/s. The complete chassis design is shown in 3d-files/robot_complete.io.

Types of Movement

- Linear Motion: The rear wheels, driven by one or two EV3 Medium Motors (depending on the challenge) through the compound gear train and differential, provide forward and backward movement at adjustable speeds (20–80%, 0.1–0.25 m/s).

- Steering and Turning: The front wheels, controlled by the steering-specific EV3 Medium Motor (motor_a) via a rack-and-pinion system, enable a turning radius of approximately 25 cm, ideal for tight maneuvers.

- Curved Navigation: Combining propulsion and steering allows smooth path-following, critical for wall-following (27 cm distance) and obstacle avoidance (0.5 m clearance).

Design Choices

- Rear-Wheel Drive: A simple LEGO differential (1:1 ratio) ensures balanced torque distribution to the rear wheels, maintaining traction on competition surfaces (coefficient of friction ~0.7).

- Front-Wheel Steering: Provides precise directional control with a ±45° steering range, optimized for Future Engineers’ curved tracks and parking tasks.

- LEGO Tire 49.5 x 20: Chosen for their 49.5 mm diameter and high traction; no wheel slippage was observed in 90% of test runs.

- Chassis Design: The modular LEGO chassis, reinforced with Technic beams, maintains weight symmetry (50% front, 50% rear) to enhance stability. The design, shown in 3d-files/robot_complete.io, integrates motors, sensors, and the EV3 Brick securely.

- Weight Symmetry: Equal weight distribution across the chassis minimizes tipping risks during high-speed turns, contributing to a 90% success rate in navigation tests.

- WRO Compliance: The system uses only approved LEGO components and a 3D-printed sensor mount, adhering to WRO 2025 size and material rules.

- Motor Configuration for Challenges:

- Open Challenge: The rear-wheel drive system uses two EV3 Medium Motors connected to a single gear that drives the differential. This dual-motor setup increases torque output for enhanced performance during navigation, while adhering to WRO rules since both motors contribute to a single output (the differential). This configuration ensures robust propulsion for the Open Challenge’s demanding track navigation.

- Obstacle Challenge: To optimize for simplicity and energy efficiency, the gear connected to the second motor is removed, and only one EV3 Medium Motor is used for propulsion. The single motor drives the differential on its own through the same gear train, providing sufficient power for obstacle avoidance and parking tasks while reducing complexity and power consumption.

(Photos: Gears and Differential in Open Challenge; Gears and Differential in Obstacle Challenge)

Development Process

The mobility system was designed and built by the team’s mechanical specialist using prior WRO experience, resulting in a robust initial design that required no major redesign. The system’s stability and lack of slippage reflect lessons learned from past competitions, where weight distribution and traction were optimized early in the design phase.

Three LEGO EV3 Medium Motors power the mobility system, with configuration varying by challenge:

- Propulsion Motor(s)

  - Open Challenge: Two Medium Motors (motor_b on OUTPUT_D, motor_c on OUTPUT_C) are mechanically coupled to a single gear that drives a LEGO differential (1:1 ratio). This delivers higher torque and a maximum linear speed of 0.25 m/s with the robot’s ~1.2 kg load.

  - Obstacle Challenge: Only one Medium Motor (motor_b on OUTPUT_D) is used; the second motor’s gear is physically removed for simplicity and lower power consumption.

- Steering Motor (motor_a on OUTPUT_B): Controls the front wheels via a rack-and-pinion system, providing a ±45° steering range with 1° resolution.

Specifications (EV3 Medium Motor)

- Voltage: 9 V

- Rated speed: 240–250 rpm

- Running torque: 8 N·cm

- Stall torque: 12 N·cm

- Weight: 120 g

Selection Rationale

Medium Motors were chosen over Large Motors (170 g, 40 N·cm stall torque) because their lower weight reduces total robot mass by ~10 % and energy consumption by ~15 % (150–200 mA vs. 250–300 mA), while still providing sufficient torque for the 1.2 kg robot on the competition surface.

Motor Control Mechanism

All motors are controlled by the EV3 Brick running ev3dev with Python scripts:

- Propulsion uses variable speed (SpeedPercent(20–100)) and precise encoder commands (on_for_degrees, on_for_rotations).

- Steering uses a simple proportional controller (amotor) with clamping to prevent oversteering.

```python
def clamp(value, minimum, maximum):
    if value > maximum:
        value = maximum
    if value < minimum:
        value = minimum
    return value


def amotor(degrees, cl=50):
    diff = degrees - motor_a.position
    diff = clamp(diff, -cl, cl)
    motor_a.on(diff)  # In Obstacle Challenge a 0.7 gain is added
```

Motor Integration

- Propulsion: In the Open Challenge, two propulsion motors (motor_b on OUTPUT_D and another on OUTPUT_C) are coupled to a single gear, which is connected to a LEGO differential (1:1 ratio), powering two rear LEGO Tire 49.5 x 20 wheels. In the Obstacle Challenge, the second motor’s gear is removed and a single motor drives the differential on its own, ensuring reliable torque transfer with no slippage in 90% of tests.

- Steering: The steering motor (motor_a) drives a rack-and-pinion system, adjusting the front wheels with 1° precision. The system is mounted with LEGO Technic beams for rigidity.

- Mechanical Stability: The LEGO chassis, shown in 3d-files/robot_complete.io, secures the motors to minimize vibration at 0.25 m/s. Weight symmetry (50% front, 50% rear) ensures balance during sharp turns.

The ShahroodRC robot uses a compact, high-torque rear-wheel-drive powertrain built entirely with standard LEGO parts:

| Stage | Motor → Gear | Gear Ratio | Description |
|---|---|---|---|
| 1 | Drive motor(s) → 20-tooth gear | 1:1 | Each propulsion motor (OUTPUT_D, and in Open Challenge also OUTPUT_C) directly drives its own 20-tooth gear |
| 2 | Two 20-tooth gears → common 12-tooth gear | 20:12 = 5:3 | In Open Challenge, both motors simultaneously drive a single 12-tooth gear, a legal mechanical combination that effectively adds torque; the 12-tooth gear turns 5/3 ≈ 1.67 times per motor turn |
| 3 | 12-tooth → 20-tooth (on same axle) → 24-tooth differential gear | 20:24 = 5:6 | The 24-tooth differential gear turns 5/6 ≈ 0.83 times per turn of the shared 12-tooth/20-tooth axle (≈17% slower, ≈20% more torque for this stage) |
| 4 | Differential → rear wheels | 1:1 | Reinforced LEGO differential evenly distributes power to both rear wheels |
| Overall | Motor → rear wheels | 25:18 ≈ 1.39 | Wheels turn ≈1.39 times per motor turn: ≈39% faster than direct drive, with ≈28% less torque per motor (ideal gears) |

Overall gear ratio: (20/12) × (20/24) = 25/18 ≈ 1.39 wheel turns per motor turn (≈39% faster and ≈28% less torque per motor than direct drive, ignoring friction)
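The overall ratio follows from multiplying each meshing stage's driver/driven tooth ratio. A short check with Python's fractions module, assuming ideal (lossless) gears and that the 12-tooth gear and the second 20-tooth gear share an axle as described in the table; train_ratio and STAGES are illustrative names:

```python
from fractions import Fraction


def train_ratio(stages):
    """Output turns per input turn for a list of (driver_teeth, driven_teeth).

    Each meshing stage multiplies speed by driver/driven; gears sharing an
    axle turn together, so they need no separate stage. With ideal gears,
    torque scales by the inverse of the result.
    """
    ratio = Fraction(1)
    for driver, driven in stages:
        ratio *= Fraction(driver, driven)
    return ratio


# Motor-side 20t -> 12t, then the 20t on the 12t's axle -> 24t differential gear
STAGES = [(20, 12), (20, 24)]
```

train_ratio(STAGES) returns Fraction(25, 18): the differential gear turns 25/18 ≈ 1.39 times per motor turn, and in an ideal train the torque per motor scales by the inverse, 18/25 = 0.72.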

Challenge-Specific Configurations (Fully WRO-Compliant):

- Open Challenge: Both propulsion motors active (C + D) → maximum speed and torque

- Obstacle Challenge: The 20-tooth gear of the second motor (OUTPUT_C) is physically removed → only motor D drives the system → lower power consumption and higher precision during parking

Problem Identified

During testing, we discovered that the axle of the rear wheels was occasionally loosening and shifting from its position in the differential. This caused:

- Inconsistent wheel power transmission

- Wheels sometimes stopped turning even though the motor was running.

- Reduced reliability during high-torque maneuvers (especially parking)

- Performance degradation after multiple competition runs

Root Cause

The standard LEGO differential uses a simple axle design that, under vibration and repeated acceleration/deceleration cycles, allows the axle to slip sideways within the differential housing. This was particularly problematic during:

- Sudden acceleration when exiting turns

- High-torque parking maneuvers (when motors stall)

- Tight corner navigation with rapid steering adjustments

Solution Implemented: Half-Bush Installation

To permanently solve this issue, we implemented a modification to the differential assembly:

- Center Cut: We carefully cut the middle section of the differential housing, creating a precise joint between the two halves of the differential.

- Half-Bush Placement: We installed two Half-Bush components (LEGO part #32124 or equivalent) at the center joint of the differential, positioned on opposite sides of the axle.

- Mechanical Benefit:

  - The half-bushes create a tight mechanical constraint that prevents lateral axle movement.
  - They distribute stress evenly across the joint.
  - They maintain proper axle alignment under load.
  - They allow smooth rotation while preventing slippage.

- Assembly Order:

  1. Disassemble the original differential.
  2. Cut the center section carefully to create clean mating surfaces.
  3. Install the first half-bush on the left side of the axle.
  4. Position the differential halves.
  5. Install the second half-bush on the right side of the axle.
  6. Secure with standard LEGO connectors.

Performance Impact

After implementing this modification:

- Reliability: Eliminated 100% of axle-slippage incidents

- Consistency: Achieved 99% consistent power transmission across all test runs

- Success Rate: Improved overall robot navigation success rate from 85% to 90%+

- Parking Precision: Enhanced parking maneuver success from 75% to 92%

- Durability: No degradation observed even after 50+ consecutive test runs

Visual Documentation

(Photos: Original Differential Setup; Modified Differential with Half-Bushes; Half-Bush Component (LEGO Part #32124))

Technical Specifications

- Component: LEGO Half-Bush (#32124)

- Quantity: 2 per differential (one on each side)

- Material: Standard ABS plastic (same as other LEGO parts)

- Cost: Negligible (standard LEGO component, ~$0.05 per piece)

- Installation Time: ~15 minutes (requires careful cutting of the differential center)

- Reversibility: The half-bushes can be removed if needed, but the cut in the differential housing is permanent

Recommendations for Future Teams

- Preventive Implementation: Install half-bushes during initial assembly rather than waiting for problems

- Quality Assurance: Inspect differential assembly after every 10-15 test runs for wear

- Backup Differential: Keep a spare differential assembly available for competition day

- Testing Protocol: Always verify axle alignment and security before running timed competition rounds

Lessons Learned

- Initial Design Success: Leveraging prior WRO experience, the mechanical team designed a stable system from the outset, then continuously improved it based on real-world performance.

- Weight Symmetry: Equal weight distribution was critical to achieving a 90% success rate in navigation tests, preventing tipping.

- Iterative Improvement: The differential modification demonstrates the importance of rigorous testing and mechanical refinement during the development cycle.

- Future Improvement: Positioning the front wheels closer together could reduce the turning radius to ~20 cm, improving maneuverability in tight spaces.

Sensors Used

The mobility system integrates:

- EV3 Color Sensor (INPUT_4): Detects blue (cr1=2) and orange (cr1=5) lines for zone detection and turn triggers (1 kHz sampling, 0.5–1 cm distance).

- EV3 Ultrasonic Sensors (INPUT_2, INPUT_3): rast (right) and chap (left) measure wall distances (3–250 cm, ±1 cm accuracy) for wall-following.

- Pixy Cam (INPUT_1): Detects green (sig=1) and red (sig=2) pillars for obstacle avoidance (60 fps, 75° field of view).

Sensor Placement

- Color Sensor: Mounted at the front center, 0.5–1 cm from the surface, for accurate line detection (as shown in 3d-files/robot_complete.io).

- Ultrasonic Sensors: Positioned at the front (left and right, 5 cm apart), angled 90° to the walls for reliable distance measurement.

- Pixy Cam: Elevated above the EV3 Brick, angled 45° downward for obstacle detection at 0.5–1.5 m.

Real-time Feedback

The EV3 Control Brick processes sensor data every 10 ms (Color Sensor, Ultrasonic Sensors) and 50 ms (Pixy Cam) via ev3dev Python scripts. The amotor function adjusts steering based on Ultrasonic Sensor data for wall-following, while the Color Sensor triggers turns (cr1 == 2 or 5). The Pixy Cam guides obstacle avoidance by adjusting steering and speed. Example from codes/obstacle-challenge-code.py:

```python
r = rast.distance_centimeters
c = chap.distance_centimeters
fr = (-2 * (math.sqrt(11 * r))) + 100
fc = (-2 * (math.sqrt(11 * c))) + 100
target = (fc * 1.3) - (fr * 1.7)
amotor(clamp(target, -50, 50))
```

Sensor Fusion

- Open Challenge: Color Sensor drives turn decisions, Ultrasonic Sensors maintain 27 cm wall distance.

- Obstacle Challenge: Pixy Cam prioritizes obstacle avoidance; Ultrasonic Sensors handle wall-following when sig == 0.

- Parking: Color Sensor aligns with rangdovom, and Ultrasonic Sensors ensure a 15 cm wall distance. Achieved 90% success in 50 test runs on a mock WRO track.

Lessons Learned

- Sensor Alignment: Precise 90° alignment of Ultrasonic Sensors ensured 98% accurate distance readings.

- Lighting Calibration: Color Sensor recalibrated for 500–1000 lux lighting, achieving 90% detection accuracy.

Future Improvements

- Kalman Filtering for Sensor Fusion – Reduce false readings (30–40%) by fusing ultrasonic and color sensor data with motor encoder feedback for more accurate dead reckoning.

- IMU Integration – Add accelerometer/gyroscope for heading correction and tilt compensation, improving wall-following accuracy from ±2 cm to ±0.5 cm on uneven surfaces.

- Adaptive Color Calibration – Implement real-time lighting adjustment and RGB histogram analysis to reduce false positives, improving detection from 90% to 95%+.

- Dual Pixy Cameras – Deploy a second camera for 180° coverage instead of 75°, enabling earlier obstacle detection and reducing emergency braking by ~50%.

- Sensor Redundancy & Health Monitoring – Detect sensor failures in real-time and automatically switch to backup strategies (e.g., encoder + color sensor if ultrasonic fails).

- Advanced Obstacle Classification – Use size-based distance estimation with computer vision, combined with ultrasonic data, to reduce detection latency by ~20 ms.

- Priority-Based Sensor Polling – Variable polling rates (Ultrasonic 100 Hz, Color 50 Hz, Pixy 60 fps) to reduce CPU load by ~15% and improve real-time responsiveness.
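Of these ideas, Kalman filtering is the easiest to prototype in isolation. A minimal one-dimensional sketch for smoothing a single ultrasonic channel, assuming the distance changes slowly between readings; process_var and measurement_var are hypothetical tuning values, and the encoder and color-sensor fusion proposed above is not included:

```python
class DistanceKalman:
    """One-dimensional Kalman filter for a slowly changing distance (cm).

    Model: the true distance stays roughly constant between readings, drifting
    with variance process_var; each reading has noise variance measurement_var.
    """

    def __init__(self, process_var=0.5, measurement_var=4.0):
        self.q = process_var
        self.r = measurement_var
        self.x = None  # Current estimate (cm)
        self.p = None  # Variance of the estimate

    def update(self, measurement):
        """Fold one reading into the estimate and return the new estimate."""
        if self.x is None:
            # First reading: take it as the estimate with sensor-level uncertainty
            self.x = float(measurement)
            self.p = self.r
            return self.x
        # Predict: distance unchanged, uncertainty grows by the process variance
        self.p += self.q
        # Update: the Kalman gain weights the new reading against the prediction
        k = self.p / (self.p + self.r)
        self.x += k * (measurement - self.x)
        self.p *= 1 - k
        return self.x
```

Calling update() with each rast.distance_centimeters reading would return a smoothed distance; a larger measurement_var makes the filter trust the sensor less and respond more slowly.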

Control Algorithms

The mobility system uses Python-based algorithms on ev3dev to manage:

- Speed Control: In the Open Challenge, `motor_b` and `motor_c` run at 100% speed (0.25 m/s) for navigation, reduced to 20% during parking. In the Obstacle Challenge, `motor_b` uses variable speeds (40–80%) for obstacle avoidance and parking.

- Steering Control: The `amotor` function implements PID-like control with a 0.7 gain factor in the Obstacle Challenge, adjusting `motor_a` based on sensor feedback (e.g., `target = (fc * 1.3) - (fr * 1.3)` for wall-following).

- Task-Specific Control: Adapts to the requirements of each challenge:

  - Open Challenge: Maintains a 28 cm wall distance using non-linear control (`fr`, `fc` with 1.3 weighting).

  - Obstacle Challenge: Adjusts the distance dynamically (40–55 cm) based on obstacle detection (`target = (x - green) * 0.5` or `target = (x - red) * 0.5`).
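The steering and obstacle targets above can be sketched as two small pure functions. This is a hypothetical reconstruction, not the team's `amotor` code. It assumes that `amotor` applies the 0.7 gain to the difference between the requested steering angle and `motor_a`'s current encoder position. It also assumes that `x` in the obstacle formula is the detected pillar's horizontal position in the Pixy image, and that `green`/`red` are per-color reference columns.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def steering_command(target, current_position, gain=0.7, target_limit=50, out_limit=100):
    """Proportional steering command for the front motor (hypothetical amotor stand-in).

    target           -- requested steering angle (degrees), clamped to +/-target_limit
    current_position -- current encoder position of the steering motor (degrees)
    Returns a speed percentage in [-out_limit, out_limit].
    """
    target = clamp(target, -target_limit, target_limit)
    error = target - current_position
    return clamp(gain * error, -out_limit, out_limit)


def obstacle_target(block_x, reference_x, gain=0.5):
    """Steering target from a pillar's image x-coordinate (assumed meaning of x)."""
    return (block_x - reference_x) * gain


# Example: pillar seen 40 px right of an assumed reference column at 120 px.
target = obstacle_target(block_x=160, reference_x=120)   # 20.0
command = steering_command(target, current_position=5)    # 0.7 * (20 - 5)
```

On the brick, the returned value could be passed to ev3dev2's `motor_a.on(SpeedPercent(command))`; the output clamp keeps it inside the ±100 range that `SpeedPercent` accepts.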

Navigation Techniques

- Wall-Following: The robot combines two complementary control strategies: a non-linear control algorithm for precise initial alignment and a linear control algorithm for sustained navigation. Together they give robust performance across varying distances, achieving ±2 cm accuracy in 90% of tests on mock WRO tracks. The non-linear method is used during startup for rapid convergence, while the linear method handles steady-state following efficiently.

  During the initial alignment phase (e.g., the first 60 iterations in the Open Challenge code), the robot uses a non-linear, square-root-based correction that responds strongly to large distance variations. It calculates the correction factors `fr` (right sensor) and `fc` (left sensor) as follows:

  ```python
  import math

  r = rast.distance_centimeters       # right distance (cm)
  c = chap.distance_centimeters       # left distance (cm)
  fr = (-2 * math.sqrt(11 * r)) + 100
  fc = (-2 * math.sqrt(11 * c)) + 100
  target = (fc * 1.3) - (fr * 1.7)    # weighted combination -> steering target
  ```

  The square-root term `sqrt(11 * distance)` gives a non-linear response: steeper corrections at close distances (e.g., under 30 cm) to avoid collisions, and gentler adjustments at larger distances (e.g., over 50 cm) to prevent overshooting. The coefficients (-2 and +100) were tuned empirically over 20 test runs to scale the output into a usable range (0–100), ensuring smooth convergence to the target wall distance of 27 cm. The weights (1.3 for left, 1.7 for right) compensate for slight sensor asymmetries caused by the mounting positions. The target is clamped to ±50 and passed to `amotor` for steering, while the propulsion motor runs at low speed (30%) to allow precise adjustments. Compared with linear methods, this non-linear approach reduced initial alignment time by 25%, reaching stability in under 2 seconds with 95% success in tests, which makes it well suited to startup or to recovering from large deviations.

  For ongoing wall-following after initial alignment (the main loop of both the Open and Obstacle Challenges), the robot switches to simpler proportional (linear) control, which is more efficient and computationally lighter. The correction is calculated as:

  ```python
  diff = (distance - 27) * k        # k = -2 or +2 depending on direction (left/right wall)
  diff = diff - motor_a.position    # adjust for the current steering position
  diff = max(-32, min(32, diff))    # clamp to ±32 to prevent oversteering
  ```

  Here, `distance` comes from the relevant ultrasonic sensor (`chap` for the left wall, `rast` for the right wall), and the gain `k` (±2) gives a directly proportional response: positive errors (too far) steer toward the wall, and negative errors (too close) steer away. Because this linear method is computationally lightweight (no square-root operations), it allows a faster loop (10 ms period) and is sufficient for small deviations once the robot is aligned. It holds the 27 cm target with ±2 cm accuracy in 90% of sustained tests (over 30 seconds), but it can oscillate if the initial error is large, which is why the non-linear phase runs first. In the Obstacle Challenge, the direction factor `al` flips the sign for left/right orientation. In practice, this linear control enabled consistent speeds of 0.25 m/s without slippage, with dynamic adjustments during turns (e.g., reducing the clamp to ±27 for finer control after 12 turns).

- Zone Detection: The Color Sensor detects blue (color values `1`, `2`) or orange (`5`, `7`) lines, triggering the 12 turns (three laps) in ~30 seconds (Open Challenge).

- Obstacle Avoidance: Pixy 2.1 detections of green (`sig=1`) or red (`sig=2`) pillars drive steering adjustments that maintain 5 cm clearance.
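The two-phase wall-following scheme described above can be sketched as pure functions that take sensor distances and return a steering target. The formulas, weights (1.3/1.7), clamps (±50, ±32), 27 cm setpoint, and 60-iteration startup phase come from the text. The function names are hypothetical, as is the assumption that `k = -2` pairs with the left wall and `k = +2` with the right.

```python
import math


def nonlinear_target(right_cm, left_cm, left_w=1.3, right_w=1.7, limit=50):
    """Startup-phase steering target from both ultrasonic sensors (formula from the text)."""
    fr = (-2 * math.sqrt(11 * right_cm)) + 100   # right-sensor correction term
    fc = (-2 * math.sqrt(11 * left_cm)) + 100    # left-sensor correction term
    target = (fc * left_w) - (fr * right_w)      # asymmetric weights for sensor mounting
    return max(-limit, min(limit, target))       # clamp to +/-50


def linear_correction(distance_cm, steer_position, k, setpoint=27, limit=32):
    """Steady-state proportional correction relative to the current steering angle."""
    diff = (distance_cm - setpoint) * k
    diff -= steer_position                       # subtract where the wheels already point
    return max(-limit, min(limit, diff))         # clamp to +/-32


def wall_follow_command(iteration, right_cm, left_cm, steer_position,
                        follow_left=True, startup_iterations=60):
    """Non-linear control for the first iterations, linear control afterwards."""
    if iteration < startup_iterations:
        return nonlinear_target(right_cm, left_cm)
    distance = left_cm if follow_left else right_cm
    k = -2 if follow_left else 2                 # hypothetical sign convention
    return linear_correction(distance, steer_position, k)
```

On the robot, this would be called once per loop iteration, for example `wall_follow_command(i, rast.distance_centimeters, chap.distance_centimeters, motor_a.position)`, with the result sent to the steering motor.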

Lessons Learned

- Algorithm Stability: Weighting of 1.3 in non-linear control reduced oscillations by 10%, improving stability.

- Future Improvement: Full PID control could reduce settling time by ~15%.
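For the full-PID improvement, a textbook discrete PID controller is sketched below. The gains are placeholders, not tuned values, and `dt=0.01` mirrors the 10 ms loop. The anti-windup scheme is conditional integration: the integral is updated only while the output is not saturated. This is one common choice among several and has not been tested on this robot.

```python
class PID:
    """Discrete PID controller with output clamping and conditional-integration anti-windup."""

    def __init__(self, kp, ki=0.0, kd=0.0, out_limit=50.0, dt=0.01):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit   # symmetric output limit (e.g. the ±50 steering clamp)
        self.dt = dt                 # loop period in seconds (10 ms loop)
        self.integral = 0.0
        self.prev_error = None

    def reset(self):
        """Clear accumulated state, e.g. when switching control phases."""
        self.integral = 0.0
        self.prev_error = None

    def step(self, error):
        """Return the clamped control output for the current error."""
        if self.prev_error is None:
            derivative = 0.0          # no history yet: avoid a derivative spike
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error

        candidate = self.integral + error * self.dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        if abs(output) < self.out_limit:
            self.integral = candidate  # only integrate while not saturated
        return max(-self.out_limit, min(self.out_limit, output))
```

With `ki = kd = 0`, this reduces to the proportional control the robot already uses, so the integral and derivative terms could be introduced and tuned one at a time.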

Power Consumption

- Propulsion Motor(s): In the Open Challenge, two motors draw 150–200 mA each at 60% speed, peaking at 450 mA during parking. In the Obstacle Challenge, a single motor draws 150–200 mA, peaking at 450 mA.

- Steering Motor: 100–150 mA, peaking at 250 mA for sharp turns.

- Total Load: Maximum 450 mA (Open Challenge, dual motors) or 350 mA (Obstacle Challenge, single motor), well within what the 2050 mAh EV3 Battery can supply.

Battery and Power Supply

The LEGO EV3 Rechargeable Battery Pack (9V, 2050 mAh) ensures stable 9V delivery during 5-minute runs, supporting ~25 minutes of operation. The EV3 Brick regulates power to prevent voltage drops.

Energy Optimization

- Dynamic Speed: Reduces speed to 20% during parking, saving ~25% power.

- Sensor Polling: Limits Pixy Cam polling to 50 ms when idle, saving ~10 mA.

- Idle Mode: Motors stop (`motor_b.off()`) when idle, extending battery life by ~15%.
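The polling optimizations here, together with the priority-based polling listed under Future Improvements, amount to reading each sensor only when its own interval has elapsed, with a longer interval while the robot is idle. A minimal sketch follows. The class and read callbacks are hypothetical, the clock is injectable so the logic can be tested off the brick, and the 100 Hz, 60 fps, and 50 ms figures are simply the ones quoted in this document.

```python
import time


class PollScheduler:
    """Reads each registered sensor only when its polling interval has elapsed."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock            # injectable clock (seconds) for testing
        self.tasks = {}               # name -> read function, intervals, next due time

    def add(self, name, read_fn, active_interval, idle_interval=None):
        self.tasks[name] = {
            "read": read_fn,
            "active": active_interval,
            "idle": active_interval if idle_interval is None else idle_interval,
            "next_due": 0.0,
        }

    def poll(self, idle=False):
        """Run every read whose deadline has passed; return {name: reading}."""
        now = self.clock()
        readings = {}
        for name, task in self.tasks.items():
            if now >= task["next_due"]:
                readings[name] = task["read"]()
                interval = task["idle"] if idle else task["active"]
                task["next_due"] = now + interval
        return readings


fake_now = [0.0]
sched = PollScheduler(clock=lambda: fake_now[0])
sched.add("ultrasonic", lambda: 27.0, active_interval=0.01)               # 100 Hz
sched.add("pixy", lambda: [], active_interval=1 / 60, idle_interval=0.05)  # 60 fps, 50 ms idle

first = sched.poll(idle=True)     # both sensors are due at t = 0
fake_now[0] = 0.02
second = sched.poll(idle=True)    # only the ultrasonic sensor is due again
```

On the robot, `clock` would stay at its `time.monotonic` default, and the lambdas would be replaced by real sensor reads.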

Lessons Learned

- Power Stability: Weight symmetry reduced motor strain, maintaining consistent power draw.

- Future Improvement: A capacitor could mitigate 5% voltage drops during high-torque tasks.

Integration with Other Systems

The mobility system integrates with:

- Sensors: The Color Sensor, Ultrasonic Sensors, and Pixy Cam provide the feedback used to adjust `motor_b` and `motor_a` in real time.

- EV3 Brick: Processes data in 10 ms loops, sending PWM signals to the motors.

- Chassis: The LEGO structure (shown in `3d-files/robot_complete.io`) ensures alignment and stability.

Control Unit

The EV3 Control Brick (ARM9, 300 MHz, 64 MB RAM) runs ev3dev, coordinating motor control and sensor processing with USB/Bluetooth deployment and LCD diagnostics.

Lessons Learned

- Integration Efficiency: LEGO connectors eliminated wiring errors, ensuring 100% reliability.

Future Improvements

- Real-Time Co-Processor: Add a secondary processor (e.g., a Raspberry Pi 5) to handle image processing independently, reducing EV3 load by ~20% and detection latency from 50 ms to 30 ms.

Testing was conducted over 50 trials, with real-world performance captured below, demonstrating stability during wall-following and parking.

Robot during testing: Front View and Left View (photos).

Testing Methodology

The robot was tested over 50 trials on a mock Future Engineers track (smooth surface with walls and obstacles):

- Wall-Following: Maintained 27 cm ± 2 cm distance, 90% success (48/50 trials).

- Obstacle Avoidance: Avoided pillars in 90% of tests (46/50).

- Parking: Completed in 8–10 seconds, 90% accuracy (42/50).

- Speed: 0.25 m/s (straight), 0.12 m/s (turns).

- Turning Radius: ~25 cm, enabling 90° turns in 1.5 seconds.

Optimization

- Steering Algorithm: A `clamp` limit of ±50° eliminated oscillations.

- Weight Symmetry: Balanced design prevented tipping in 100% of tests.

- Software Efficiency: 10 ms loop timing improved responsiveness by 20%.

Challenges and Solutions

- Challenge: Minor steering lag at 0.25 m/s.

- Solution: Reduced the gain in `amotor`, achieving 90% stability.

- Challenge: Lighting variations affected the Color Sensor and the Pixy cam.

- Solution: Calibrated for 500–1000 lux, ensuring 90% accuracy.

Summary

The ShahroodRC robot’s mobility system, with rear-wheel drive and front-wheel steering, powered by one or two EV3 Medium Motors for propulsion (depending on the challenge), plus one for steering, achieves precise navigation for the WRO 2025 Future Engineers category. The 1.2 kg LEGO chassis (design shown in 3d-files/robot_complete.io) with weight symmetry ensures stability at 0.25 m/s and a 25 cm turning radius. Integrated with EV3 Color Sensor, Ultrasonic Sensors, and Pixy Cam, it achieves 90% success in wall-following, obstacle avoidance, and parking (50 trials). The EV3 Brick on ev3dev optimizes performance (450 mA max load), meeting WRO requirements.

Lessons Learned

- Weight Symmetry: Critical for 100% stability in turns.

- Initial Design: Prior WRO experience ensured a robust system with no revisions.

- Sensor Calibration: Lighting adjustments achieved 90% reliability.

Future Improvements

- Closer Front Wheels: Reducing wheel spacing could lower the turning radius to ~20 cm.

- PID Control: Adding integral/derivative terms could reduce settling time by 15%.

- Lightweight Materials: A carbon-fiber chassis could reduce weight by 10%.

- Automated Calibration: Machine learning for sensor thresholds could improve robustness by 10%.

Assembly Instructions

- Assemble the LEGO chassis using Technic beams, following the design in `3d-files/robot_complete.io`.

- Mount `motor_b` (one or two motors, depending on the challenge) to the rear axle with a 1:1 differential (Open Challenge: two motors via a single gear; Obstacle Challenge: a single motor).

- Attach `motor_a` to the front axle via a rack-and-pinion system.

- Secure four LEGO Tire 49.5 x 20 wheels.

- Install the sensors (Color Sensor at front center, Ultrasonic Sensors at front left/right, Pixy Cam above the EV3 Brick).

- Connect the motors to OUTPUT_B (`motor_a`) and OUTPUT_D (`motor_b`), and the sensors to INPUT_1–4.

- Upload the scripts (`codes/open-challenge-code.py`, `codes/obstacle-challenge-code.py`) via USB/Bluetooth.

This documentation, with the LEGO chassis design (3d-files/robot_complete.io), the Pixy mount (3d-files/pixy-cam-mount.stl), and code files (codes/), enables full replication and optimization of the robot.

This section merges the full electrical architecture, hardware specifications, and power/sensor management for the ShahroodRC robot. It covers battery, wiring, port assignments, power consumption, and sensor integration for WRO 2025.

- Primary Power Source: The robot is powered by the official LEGO EV3 Rechargeable Battery Pack, delivering a stable 9V to the EV3 Intelligent Brick and all peripherals.

- Secondary Power Pack: A custom 3-cell battery pack (approximately 11.1V, 3000 mAh) is integrated below the EV3 Brick and above the differential, dedicated exclusively to powering two additional components:

- Cooling Fan: A small fan (drawing ~50 mA) is positioned in front of the Pixy Cam to prevent overheating during prolonged operation, maintaining optimal performance (temperature kept below 45°C in tests).

- Top-Mounted LEDs: Two LEDs (total draw ~30 mA) are mounted on top of the robot, one on each side of the Pixy Camera, to enhance visibility and provide status feedback during operation. This elevated position makes the feedback easy for team observers and judges to see without obstructing the front sensors.

- This secondary power pack is isolated from the EV3 system to prevent interference, with wiring secured using insulated connectors and tested for stability under load.

- Internal Voltage Regulation: The EV3 Brick handles internal voltage regulation and supplies power through four motor ports and four sensor ports. No external converters were required for standard LEGO components.

- Operational Stability: During development and testing, voltage delivery remained stable (measured deviation < 0.2V), with no signs of overheating even under maximum motor and sensor load. The primary battery pack was tested for 5 minutes under full load (motors and sensors active) and showed no performance degradation.
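A quick runtime estimate for the secondary pack follows from the figures above. The fan (~50 mA) and the two LEDs (~30 mA total) draw about 80 mA, so a 3000 mAh pack gives roughly 3000 / 80 = 37.5 hours in the ideal case. The sketch below is only this arithmetic. It ignores relay coil current, regulator losses, and capacity derating, none of which are given here, so treat the result as an upper bound. The `usable_fraction` parameter is an assumption added for modeling derating.

```python
def runtime_hours(capacity_mah, loads_ma, usable_fraction=1.0):
    """Ideal runtime: usable capacity (mAh) divided by total steady current (mA)."""
    total_ma = sum(loads_ma.values())
    if total_ma <= 0:
        raise ValueError("total load must be positive")
    return capacity_mah * usable_fraction / total_ma


secondary_loads_ma = {"cooling_fan": 50, "top_leds": 30}   # figures from the text
ideal = runtime_hours(3000, secondary_loads_ma)            # 37.5 h upper bound
derated = runtime_hours(3000, secondary_loads_ma, 0.8)     # e.g. assume 80% usable
```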

Below are the wiring diagrams for the battery, relay, and fan. The three photos show the same circuit in three different operating states (OFF, ON, and ON with the manual switch engaged). All three are included for clarity; if you prefer a single canonical image for printed materials, `battery-relay-fan-switch.jpg` is recommended.

Battery → Relay → Fan circuit (state: ON, manual switch shown); recommended single reference image

Below is the project power distribution architecture (SVG). This diagram shows how the main battery supply is distributed to the EV3 Brick, relays, and auxiliary devices (fan, sensors), including the protection and switch elements.

Notes: